Crossed Lamps

Last weekend we bought two Rodd lamps at Ikea for the guest room, and it struck me how amused I'd be if each one switched the other. Six hours and a few new parts later, and it came out pretty well:

The remote action is especially jarring because the switches are right next to the bulbs they would normally control:

Amazon S3 as Append Only Datastore

As a hack, when I need an append-only datastore with no authentication or validation, I use Amazon S3. S3 is usually a read-only service from the unauthenticated web client's point of view, but if you enable access logging to a bucket you get full-query-parameter URLs recorded in a text file for GETs that can come from a form's action or via XHR.

There aren't a lot of internet-safe append-only datastores out there. All my favorite NoSQL solutions divide permissions into read and/or write, where write includes delete. SQL databases let you grant an account insert without update or delete, but none of them suggest letting the database listen on a port that's open to the world.

This is a bummer because there are plenty of use cases where you want unauthenticated client-side code to add entries to a datastore, but not read or modify them: analytics gathering, polls, guest books, etc. Instead you end up with a bit of server-side code that does little more than relay the insert to the datastore using over-privileged authentication credentials that you couldn't put in the client.

To play with this, first, create a file named vote.json in a bucket with contents like {"recorded": true}, make it world readable, set Cache-Control to max-age=0,no-cache and Content-Type to application/json. Now when a browser does a GET to that file's https URL, which looks like a real API endpoint, there's a record in the bucket's log that looks something like:

aaceee29e646cc912a0c2052aaceee29e646cc912a0c2052aaceee29e646cc91 bucketname [31/Jan/2013:18:37:13 +0000] 96.126.104.189 - 289335FAF3AD11B1 REST.GET.OBJECT vote.json "GET /bucketname/vote.json?arg=val&arg2=val2 HTTP/1.1" 200 - 12 12 9 8 "-" "lwp-request/6.03 libwww-perl/6.03" -

The full format is described by Amazon, but with client IP and user agent you have enough data for basic ballot box stuffing detection, and you can parse and tally the query arguments with two lines of your favorite scripting language.
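A minimal sketch of that tally in Python, assuming log lines shaped like the sample above. The function name tally_votes and the regular expression are illustrative assumptions, not part of any Amazon tooling, and the pattern only looks at the request portion of each line rather than parsing the full log format.

```python
import re
from collections import Counter
from urllib.parse import urlsplit, parse_qsl

# Matches the operation, key, and quoted request from an S3 access log line.
# This is a simplification: it ignores every other field in the log format.
REQUEST_RE = re.compile(r'REST\.GET\.OBJECT \S+ "GET (\S+) HTTP/[\d.]+"')


def tally_votes(log_lines, path_suffix="/vote.json"):
    """Count each (argument, value) pair seen in GETs for the vote file."""
    counts = Counter()
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if not match:
            continue  # not a GET for an object, or an unexpected layout
        url = urlsplit(match.group(1))
        if not url.path.endswith(path_suffix):
            continue  # some other object in the bucket
        counts.update(parse_qsl(url.query))
    return counts


sample = (
    'aaceee29e646cc912a0c2052aaceee29e646cc912a0c2052aaceee29e646cc91 '
    'bucketname [31/Jan/2013:18:37:13 +0000] 96.126.104.189 - '
    '289335FAF3AD11B1 REST.GET.OBJECT vote.json '
    '"GET /bucketname/vote.json?arg=val&arg2=val2 HTTP/1.1" 200 - 12 12 9 8 '
    '"-" "lwp-request/6.03 libwww-perl/6.03" -'
)
print(tally_votes([sample]))
```

Ballot box stuffing detection would need the client IP and user agent fields too, which this sketch deliberately skips.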

This scheme is especially great for analytics gathering because one never has to worry about full log disks, load balancers, backed up queues, or unresponsive data collection servers. When you're ready to process the data it's already on S3 near EC2, AWS Data Pipeline or Elastic MapReduce. Plus, S3 has better uptime than anything else Amazon offers, so even if your app is down you're probably recording the failed usage attempts.

Creating Burn Down Charts for GitHub Repositories Using Google Apps Script

At DramaFever I got folks to buy into burn down charts as the daily display for our weekly sprints, with the rotating release person being responsible for updating a Google spreadsheet with each day's end-of-day open and closed issue counts.

It works fine, and it's only a small time burden, but if one forgets there's no good way to get the previous day's counts. Naturally I looked to automation, and GitHub has an excellent API. My usual take on something like this would be to have cron trigger a script that:

- polls the GitHub API

- extracts the counts

- writes them to some data store

- builds today's chart from the historical data in the datastore
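For comparison, the first three steps of that cron-style approach might look roughly like this in Python. This is a sketch under stated assumptions: the function names and the CSV file standing in for "some data store" are hypothetical, only the first page of results (up to 100 issues) is fetched, and no authentication is sent, so it would only work as-is against a public repository.

```python
import csv
import json
import urllib.request
from datetime import date


def fetch_issues(owner, repo, state):
    """Fetch the first page (up to 100) of issues in the given state."""
    url = (f"https://api.github.com/repos/{owner}/{repo}/issues"
           f"?state={state}&per_page=100")
    with urllib.request.urlopen(url) as response:
        return json.load(response)


def count_issues(issues):
    """Count issues, skipping pull requests, which this endpoint also returns."""
    return sum(1 for issue in issues if "pull_request" not in issue)


def record_counts(csv_path, open_count, closed_count, day=None):
    """Append one row of date, open count, closed count to the CSV file."""
    day = day or date.today()
    with open(csv_path, "a", newline="") as handle:
        csv.writer(handle).writerow([day.isoformat(), open_count, closed_count])
```

A cron job would call fetch_issues once for "open" and once for "closed", pass each result through count_issues, and hand the two numbers to record_counts. Charting from the accumulated rows is left out.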

That could be easily done in bash, Python, or Perl, but it's the sort of thing that's inevitably brittle. It's too ad hoc to run on a prod server, so it'll run from a dev shell box, which will be down, or disk full, or rebuilt w/o cron migration or any of the million other ailments that befall non-prod servers.

Looking for something different I tried Google Apps Script for the first time, and it came out very well.

GitHub Jenkins Deploy Keys Config

GitHub doesn't let you use the same deploy key for multiple repositories within a single organization, so you have to either (a) manage multiple keys, (b) create a non-human user (boo!), or (c) use their not-yet-ready-for-primetime HTTP OAuth deploy access, which can't create read-only permissions.

In the past, to manage the multiple keys I've either (a) used ssh-agent or (b) specified which private key to use for each request using -i on the command line, but neither of those is convenient with Jenkins.

Today I finally thought about it a little harder and figured out I could use fake hostnames that map to the right keys within the .ssh/config file for the Jenkins user. To make it work I put stanzas like this in the config file:

Host repo_name.github.com
    Hostname github.com
    HostKeyAlias github.com
    IdentityFile ~jenkins/keys/repo_name_deploy

Then in the Jenkins GitHub Plugin config I set the repository URL as:

git@repo_name.github.com:ry4an/repo_name.git

There is no host repo_name.github.com and it wouldn't resolve in DNS, but that's okay because the .ssh/config tells ssh to actually connect to github.com while still selecting the right key for that repository.
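With more than a couple of repositories the stanzas get repetitive, so they could be generated. A small sketch, assuming every repository follows the same naming pattern as the example above; the function names and the key_dir default are illustrative, not anything Jenkins or GitHub provides.

```python
def deploy_key_stanza(repo_name, key_dir="~jenkins/keys"):
    """Render the .ssh/config stanza mapping a fake host to one deploy key."""
    return "\n".join([
        f"Host {repo_name}.github.com",
        "    Hostname github.com",
        "    HostKeyAlias github.com",
        f"    IdentityFile {key_dir}/{repo_name}_deploy",
    ])


def clone_url(owner, repo_name):
    """Build the repository URL that uses the fake per-repo hostname."""
    return f"git@{repo_name}.github.com:{owner}/{repo_name}.git"


# Hypothetical repository names, for illustration only.
for name in ["repo_one", "repo_two"]:
    print(deploy_key_stanza(name))
    print()
```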

Maybe this is obvious and everyone's doing it, but I found it the least-hassle way to maintain the accounts-for-people-only rule along with the separate-keys-for-separate-repos rule and without running the ssh-agent daemon for a non-login shell.

spdyproxy on Ubuntu 12.04 LTS

I'm often on unencrypted wireless networks, and I don't always trust everyone on the encrypted ones, so I routinely run a SOCKS proxy to tunnel my web traffic through an encrypted SSH tunnel. This works great, but I have to start the SSH tunnel before I start browsing -- that's okay, IRC before reader -- and when I sleep the laptop the SSH tunnel dies and requires a restart before I can browse again. In the past I've used autossh to automate that reconnect, but it still requires more attention than it deserves.

So I was excited when I saw Ilya Grigorik's writeup on spdyproxy. With SPDY, multiple independent bi-directional connections are multiplexed through a single TCP connection, in the same way some of us tried to do with web-mux in the late 90s (I've got a Java 1.1 implementation somewhere). SPDY connections can be encrypted, so when making a SPDY connection to an HTTP proxy you're getting an encrypted tunnel through which your HTTP connections to anywhere can be tunneled, and probably getting a TCP setup/teardown speed boost as well. Ilya's excellent node-spdyproxy handles the server side of that setup admirably, and the rest of this writeup covers my getting it running (with production accouterments) on an Ubuntu 12.04(.1) LTS system.

With the below setup in place I can use a proxy.pac to make sure my browser never sends an unencrypted byte through whatever network I'm on -- DNS lookups excluded, you still need SOCKSv4a or SOCKSv5 to hide those.

Getting Chef Solo Working With the Database Cookbook and Vagrant

This is going to be one big jargon-laden blob if you're not trying to do exactly what I was trying to do this week, but hopefully it turns up in a search for the next person.

I'm setting up a new development environment using Vagrant and I'm using Chef Solo to provision the Ubuntu (12.04 Precise Pangolin) guest. I wanted MySQL server running and wanted to use the database cookbook from Opscode to pre-create a database.

My run list looked like:

recipe[mysql::server], recipe[webapp]

Where the webapp recipe's metadata.rb included:

depends "database"

and the default recipe led off with:

mysql_database 'master' do
  connection my_connection
  action :create
end

Which would blow up at execution time with the message "missing gem 'mysql'", which was really frustrating because the gem was already installed in the previous mysql::server recipe.

I learned about chef_gem as compared to gem_package from the chef docs, and found another page that showed how to run actions at compile time instead of during chef processing, but only when I did both like this:

%w{mysql-client libmysqlclient-dev make}.each do |pack|
  package pack do
    action :nothing
  end.run_action(:install)
end

g = chef_gem "mysql" do
  action :nothing
end
g.run_action(:install)

was I able to get things working. Notice that I had to install the OS packages for mysql, the mysql development headers, and the venerable make before I could early install the mysql ruby gem into chef's gem path -- which with Vagrant's chef solo provisioner is entirely separate from any system gems.

Android Fragmentation Analogy

This whole post should just be a single tweet, but somehow it doesn't feel safe to say anything comparing Android and iOS development, even implicitly, without five paragraphs full of disclaimers. First, here's the tweet:

Developers complaining about fragmentation on the Android platform are like fashion designers complaining about size fragmentation on the human body.

Expanding on that I'd say, yes, it's easier to make something that fits beautifully if you know the exact size and shape you're targeting and don't have to think about how it will look on other form factors / body shapes. Some designers create clothes for only the fashion model body shape, and some create clothes that fit a wider variety of body shapes.

An average Android application that doesn't do anything particularly out of the norm visually and avoids undocumented behaviors, does just fine on any device at its target version or later. It might not be visually stunning, but it's worth noting that "just fine" is always available, and plenty of great apps have taken it.

On the other hand, developers that reach for visual excellence on the Android platform do have a hard row to hoe, in the same way that making clothing that's flattering on all body shapes is very hard. Things have to be extensively tweaked and tested. If you're building Flipboard or a game engine on Android, then I totally get that the variety of resolutions, form factors, and input options makes your job harder.

To be clear, I'm not drawing any analogies between iOS devices and fashion models excepting the uniformity of shape/form factor, and I'm not saying anything particularly negative about designers that stop at size two or developers that only do iOS.

I'm just saying that Android fragmentation isn't inherently a problem, and that while it makes stunningly beautiful applications harder, it doesn't make the basic "getting stuff done" application that represents 99% of both the iOS and Android app stores any harder. Most iOS and Android apps are just using the usual controls in the usual way, and wouldn't be affected by Android fragmentation in any meaningful way.

Low Flow Shower Delay

When I start up the shower it's the wrong temperature and adjusting it to the right temperature takes longer in this apartment than it has in any home in which I've previously lived. I wanted to blame the problem on the low flow shower head, but I'm having a hard time doing it. My thinking was that the time delay from when I adjust the shower to when I actually feel the change is unusually high due to the shower head's reduced flow rate.

In setting out to measure that delay I first found the flow rate in liters per second for the shower. I did this three times using a bucket and a stopwatch finding the shower filled 1.5 qt in 12 s, or 1.875 gpm, or 0.118 liters per second. A low flow shower head, according to the US EPA's WaterSense program, is under 2.0 gpm, so that's right on target.

That let me know how quickly the "wrong temperature" water leaves the pipe, so next I needed to see how much of it there is. From the hot-cold mixer to the shower head there's 65 inches of nominal 1/2" pipe, which has an inner diameter of 0.545 inches. The volume of a cylinder (I'm ignoring the curve of the shower arm) is just pi times radius squared times length:

Converting to normal units gives 1.3843 centimeters diameter and 165.1 centimeters length, which yields 248 cm3, or 0.248 liters, of wrong temperature water to wait through until I get to sample the new mix.

With a flow rate of 0.118 liters per second and 0.248 liters of unpleasant water I should be feeling the new mix in 2.1 seconds. I recognize that time drags when you're being scalded, but it still feels like longer than that.
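The arithmetic above can be checked with a few lines of Python. This is just a sketch that reuses the measurements from the post; the function names and the conversion constants are the only additions.

```python
import math

LITERS_PER_QUART = 0.946352946  # US liquid quart
CM_PER_INCH = 2.54


def flow_rate_lps(quarts, seconds):
    """Flow rate in liters per second from a bucket-and-stopwatch reading."""
    return quarts * LITERS_PER_QUART / seconds


def pipe_volume_liters(inner_diameter_in, length_in):
    """Volume of a straight pipe, treated as a cylinder: pi * r^2 * length."""
    radius_cm = inner_diameter_in * CM_PER_INCH / 2
    length_cm = length_in * CM_PER_INCH
    return math.pi * radius_cm ** 2 * length_cm / 1000  # 1000 cm3 per liter


flow = flow_rate_lps(1.5, 12)           # 1.5 qt in 12 s
volume = pipe_volume_liters(0.545, 65)  # 65 in of pipe, 0.545 in inner diameter
delay = volume / flow

print(f"flow rate: {flow:.3f} L/s")
print(f"pipe volume: {volume:.3f} L")
print(f"delay: {delay:.1f} s")
```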

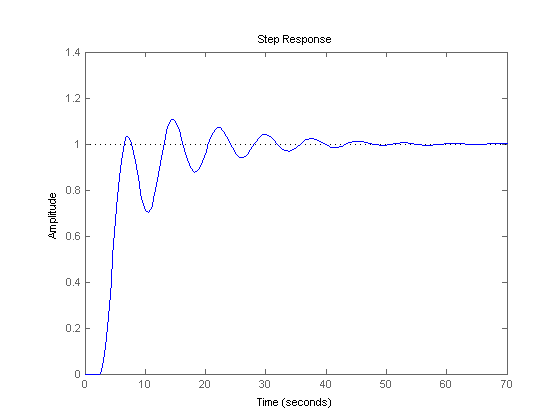

I've done some casual reading about linear shift time delays in feedback-based control systems, and the oscillating convergence they show certainly aligns with the too-hot then too-cold feel of getting this shower right. This graph is swiped from MathWorks and shows a closed loop step response with a 2.5 second delay:

That shows about 40 seconds to finally home in on the right temperature and doesn't include a failure-prone, over-correcting human. I'm still not convinced the delay is the entirety of the problem, but it does seem to be a contributing factor. Some other factors that may affect perception of how slow this shower is to get right are:

- non-linearity in the mixer

- water in the shower head itself

- water already "in flight" having left the head but not yet having hit me
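The too-hot then too-cold behavior of a delayed feedback loop can be reproduced with a toy simulation. This is a rough sketch, not the MathWorks model: it assumes the bather nudges the mixer each step in proportion to the error they currently feel, and that what they feel is the setting from a fixed number of steps ago. The gain, step count, and function name are arbitrary choices made for illustration.

```python
def simulate_adjustments(delay_steps, gain=0.5, target=1.0, steps=200):
    """Return the mixer setting after each step of a delayed feedback loop."""
    settings = [0.0]
    for step in range(steps):
        # The bather only feels the setting from delay_steps ago; before
        # that water arrives they feel the starting setting of 0.0.
        felt_index = step - delay_steps
        felt = settings[felt_index] if felt_index >= 0 else 0.0
        # Nudge the mixer in proportion to the error that is felt right now.
        settings.append(settings[-1] + gain * (target - felt))
    return settings


immediate = simulate_adjustments(delay_steps=0)
delayed = simulate_adjustments(delay_steps=2)
print("peak setting with no delay:", max(immediate))
print("peak setting with a 2 step delay:", max(delayed))
```

With no delay the setting creeps up to the target without passing it. With the delay the same adjustments overshoot and then oscillate before settling, because each correction is based on stale information.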

Mercifully, the water temperature is consistent -- once you get it dialed in correctly it stays correct, even through a long shower.

I guess the next step is to get out a thermometer and try both to characterize the linearity of the mixing control and to measure the rate of change in the temperature as related to the magnitude of the adjustment.

Update: I got a ChemE friend to weigh in with some help.

OS X Linux Clipboard Sharing

My primary home machine is a Linux desktop, and my primary work machine is an OS X laptop. I do most of my work on the Linux box, ssh-ed into the OS X machine -- I recognize that's the reverse of usual setups, but I love the awesome window manager and the copy-on-select X Window selection scheme.

My frustration is in having separate copy and paste buffers across the two systems. If I select something in a work email, I often want to paste it into the Linux machine. Similarly if I copy an error from a Linux console I need to paste it into a work email.

There are a lot of ways to unify clipboards across machines, but they're all either full-scale mouse and keyboard sharing, single-platform, or GUI tools.

Finding the excellent xsel tool, I cooked up some command lines that would let me shuttle strings between the Linux selection buffer and the OS X system via ssh.

I put them into the Lua script that is the shortcut configuration for awesome and now I can move selections back and forth. I also added some shortcuts for moving text between the Linux selection (copy-on-select) and the clipboard (copy-on-keypress).

-- Used to shuttle selection to/from mac clipboard

select_to_mac = "bash -c '/usr/bin/xsel --output | ssh mac pbcopy'"

mac_to_select = "bash -c 'ssh mac pbpaste | /usr/bin/xsel --input'"

-- Used to shuttle between selection and clipboard

select_to_clip = "bash -c '/usr/bin/xsel --output | /usr/bin/xsel --input --clipboard'"

clip_to_select = "bash -c '/usr/bin/xsel --output --clipboard | /usr/bin/xsel --input'"

awful.key({ modkey, }, "c", function () awful.util.spawn(mac_to_select) end),

awful.key({ modkey, }, "v", function () awful.util.spawn(select_to_mac) end),

awful.key({ modkey, "Shift" }, "c", function () awful.util.spawn(clip_to_select) end),

awful.key({ modkey, "Shift" }, "v", function () awful.util.spawn(select_to_clip) end),

NFC PayPass Rick Roll

NFC tags are tiny wireless storage devices, with very thin antennas, attached to poker chip sized stickers. They're sort of like RFID tags, but they only have a 1 inch range, come in various capacities, and can be easily rewritten. If the next iPhone adds an NFC reader I think they'll be huge. As it is they're already pretty fun and only a buck each even when bought in small quantities.

Marketers haven't figured it out yet, but no one wants to scan QR barcodes. The hassle with QR codes is that you have to fire up a special barcode reader application and then carefully focus on the barcode with your phone's camera. NFC tags have neither of those problems. If your phone is awake it's "looking for" NFC tags, and there's no aiming involved -- just tap your phone on the tag.

An NFC tag stores a small amount of data that's made available to your phone when tapped. It can't make your phone do anything your phone doesn't already do, and your phone shouldn't be willing to do anything destructive or irreversible (send money) without asking for your confirmation.

People are using NFC tags to turn on bluetooth when their phone is placed in a stickered cup-holder, to turn off their email notification checking when their phone is placed on their nightstand, and to bring up their calculator when their phone is placed on their desk. Most anything your phone can do can be triggered by an NFC tag.

NFC tags are already in use for transit passes, access keys, and payment systems. I can go to any of a number of businesses near my home and make a purchase by tapping my phone against the MasterCard PayPass logo on the card scanner. My phone will then ask for a PIN and ask me to confirm the purchase price, which is deducted from my Google Wallet or charged to my Citi MasterCard.

I'm still batting around ideas for a first NFC project, maybe a geocaching / scavenger-hunt-like trail of tags with clues, but meanwhile I made some fake MasterCard PayPass labels that are decidedly more fun:

Keep in mind that phone has absolutely no non-standard software or settings on it. Any NFC-reader equipped phone that touches that tag will be rick rolled. Now to get a few of those out on local merchants' existing credit card readers.

Content License

This work is licensed under a Creative Commons Attribution-NonCommercial 3.0 Generic License.