Approval Voting for the ACM

Approval voting is an alternative voting system that has many benefits compared to Instant Run-Off Voting. For years I've been running the on-line officer elections for the local campus chapter of the Association for Computing Machinery. Last year I talked them into switching to approval voting (even though it probably violates their charter), and it worked really well. Their elections have kicked off again, and once again I'm hosting them and using my voting script.
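
Approval voting's counting rule is simple: each voter marks every candidate they find acceptable, and whoever collects the most marks wins. The sketch below illustrates that tally in Python. It is not the voting script mentioned above, and the function name and ballot format (one set of candidate names per voter) are assumptions for illustration.

```python
from collections import Counter


def approval_winners(ballots):
    """Tally approval ballots and return (winners, tally).

    Each ballot is the collection of candidates one voter approves of. A voter
    may approve any number of candidates, and each approval counts as one vote.
    """
    tally = Counter()
    for ballot in ballots:
        # set() so approving the same candidate twice on one ballot counts once
        tally.update(set(ballot))
    if not tally:
        return [], tally
    top = max(tally.values())
    # Return every candidate tied for the most approvals, in a stable order
    winners = sorted(c for c, n in tally.items() if n == top)
    return winners, tally
```

Returning every tied leader, rather than silently picking one, leaves tie-breaking to whatever separate rule the election uses.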

SwarmStream Article on the O'Reilly Network

I wrote an article that got posted on the O'Reilly Network. It sounds a little more huckster-ish than I'd like, but the tech does get explained pretty well. There's a link to the new beta 2 release of SwarmStream Public Edition at the bottom of the article.

Detecting Recently Used Words On the Fly

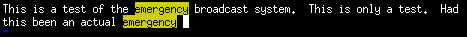

When writing I frequently find myself searching backward, either visually or using a reverse-find, to see if I've previously used the word that I've just used. Some words, "furthermore" for example, just can't show up more than once per paragraph or two without looking overused.

I was thinking that if my editor/word-processor had a feature wherein all instances of the word I just typed were briefly highlighted, it would let me notice awkward repeats without having to actively watch for them. Nothing terribly intrusive, mind you, just a quick flicker of highlight while I type.

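A little time spent figuring out key bindings in vim, my editor of choice, left me with this ugly-looking command:

    " Assumes 'hlsearch' is on; without it the search pattern is set but nothing lights up
    set hlsearch
    " On <space>: leave insert mode, yank the word under the cursor into register z,
    " make it the current search pattern, jump back to the last change, and append the space
    inoremap <space> <esc>"zyiw:let @/=@z<cr>`.a<space>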

As a proof-of-concept, it works pretty much exactly how I described, though it breaks down a bit around punctuation and double spaces. I'm sure someone with stronger vim-fu could iron out the kinks, but it's good enough for me for now. Here's a mini-screenshot of the highlighting in action.

I'm sure the makers of real word processors, like OpenOffice, could add such a feature without much work, but maybe no one but me would ever use it.
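
Outside of vim, the same idea is easy to prototype. Here is a minimal Python sketch, assuming the editor can hand over the text typed so far. The function name and the 100-word window (a stand-in for "a paragraph or two") are hypothetical choices, not anything vim or OpenOffice provides.

```python
import re


def recent_repeats(text, window=100):
    """Find earlier uses of the last word in `text` within the previous `window` words.

    Returns the (start, end) character offsets of each earlier occurrence, so an
    editor could briefly highlight them. Matching is case-insensitive and only
    counts whole words, so trailing punctuation and double spaces don't matter.
    """
    words = list(re.finditer(r"[A-Za-z']+", text))
    if not words:
        return []
    last = words[-1].group().lower()
    # Only look back `window` words, excluding the word just typed
    earlier = words[max(0, len(words) - 1 - window):-1]
    return [m.span() for m in earlier if m.group().lower() == last]
```

An editor would call this after each space and flash the returned spans.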

Obscuring MoinMoin Wiki Referrers

When you click on a link in your browser to go to a new web page, your browser sends along a Referrer: header (spelled Referer in the HTTP specification), which tells the owner of the linked-to site the URL of the page where the link was found. It's a nice little feature that helps website creators know who is linking to them. Referrer headers are easily faked or disabled, but most people don't bother, because there's generally no harm in telling a website owner who told you about their site.

However, there are cases where you don't want the owner of the link target to know who has linked to them. We've run into one of these where I work because one of our internal websites is a wiki. One feature of wikis is that the URLs tend to be very descriptive. Page addresses like http://wiki.internal/ProspectivePartners/ showing up in the Referrer: header might give away more information than we want appearing in someone else's logs.

The usual way to muffle the outbound referrer information from the linking website is to route the user's browser through a redirect. I installed a simple redirect script and figured out I could get MoinMoin, our wiki software of choice, to route all external links through it by inserting this into the moin_config.py file:

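    # moin_config.py: send every external link through the internal redirect
    # script so the target site never sees the wiki page's URL
    url_mappings = {
        'http://': 'http://internal/redirect/?http://',
        'https://': 'http://internal/redirect/?https://',
    }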

Now the targets of the links in our internal wiki only see '-' in their referrer logs, and no code changes were necessary.
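
The post doesn't include the redirect script itself. As a rough illustration only, here is a minimal one written as a Python WSGI app. The function name and the choice of WSGI are assumptions, and it expects the target URL as the raw query string, which is what the url_mappings entries above produce. The Referrer-Policy: no-referrer header is an extra safeguard that current browsers are expected to honor on redirects; it isn't part of the original setup.

```python
def redirect_app(environ, start_response):
    """Hypothetical minimal redirect script as a WSGI application.

    A request for /redirect/?http://example.com/page is answered with a 302
    pointing at http://example.com/page.
    """
    # Everything after the first '?' is the target URL, passed through as-is
    target = environ.get("QUERY_STRING", "")
    # Only bounce to web URLs, so the script can't be pointed at other schemes
    if not target.startswith(("http://", "https://")):
        start_response("400 Bad Request", [("Content-Type", "text/plain")])
        return [b"missing or invalid target URL\n"]
    start_response("302 Found", [
        ("Location", target),
        # Ask the browser not to send any Referer on the redirected request
        ("Referrer-Policy", "no-referrer"),
        ("Content-Length", "0"),
    ])
    return [b""]
```

To try it locally, the standard library's `wsgiref.simple_server.make_server("", 8000, redirect_app).serve_forever()` is enough; a real deployment would sit behind whatever web server hosts http://internal/redirect/.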

Comments

I'm working on installing a somewhat sensitive wiki, so this is interesting.

How does the redirect script remove the referer, though? I couldn't figure that out from the script.

It just does, due to the nature of the Referrer: header. When doing a GET, a browser provides the address of the page where the clicked link was found. When a link on page A points to a redirection script, B, the browser tells B that the referrer was A. Then the redirect script, B, tells the browser to go to page C -- redirects it. When the browser goes to page C, the real target page, it doesn't send a Referrer: header because it's not following a link -- it's following a redirect. So the site owner of C never sees page A in their logs. S/he doesn't see the address of B in those logs either, because browsers just don't send a Referrer: header at all on redirects. -- Ry4an

There was a little more talk about this on the MoinMoin general mailing list, including my proposal for adding redirect-driven masking as a configurable MoinMoin option. -- Ry4an

Ringback Tones Made Less Evil

Foreign cell phone services have had a feature for a while called Ringback Tones, which lets you replace the normal ringing sound that callers hear while they're waiting for you to answer with a short audio clip. This isn't the annoying ring that the people near you hear until you answer your phone, but the even more annoying ring that the people calling you hear directly in their ear. The feature has come to the US recently, and my cell phone provider, T-Mobile, calls its offering Caller Tunes.

When I first heard about this impending nightmare, my initial thought was, "Anyone I call with this feature will have their number removed from my phone immediately." I'm still dreading calling a (soon to be former) friend and hearing the sort of music that sends me diving for a stereo's power cord, but now that I've looked into the feature more I'm thinking of getting it myself.

As I've discovered from T-Mobile's on-line Flash demo, you can actually overlay your voice atop whichever audio clip is playing as a ringback. Assuming they have a reasonably normal ringing sound as one of the clips, I could select that and overlay it with my voice explaining to people that in lieu of a phone call I'd much rather get an email or a text message. Now that would be great. Additionally, Caller Tunes allows you to set different ringbacks for different times of day and for different callers, which would allow me to omit the snarky message during work hours and for callers who I know will never text anyone (Hi, Mom).

It's still probably not worth doing, but damn is it tempting.

Designing a Beer Temperature Experiment

I've repeatedly encountered the statement, always presented as fact, that if you chill beer, let it return to room temperature, and then chill it again, you will have degraded its quality. This has always seemed like nonsense to me for a few different reasons, chief among them that surely this chill/warm cycle happens repeatedly during transport and retail.

As a beer snob, I generally drink beers imported from Europe. These cross the icy North Atlantic to the US in huge container ships, and then travel by semi truck to Minnesota. Next they're stored at distribution centers, in retail warehouses, and on the sales floor (or in the beer cooler). Surely in one season or another the temperature variance during those many legs and stops constitutes at least one cooling/warming cycle.

I'd like to test the theory, but I'm still trying to figure out just how to organize the experiment. Some things I know that have to be included or controlled are:

- temperature
  - four "life cycles"
    - bought cold (and kept that way)
    - bought warm and cooled once
    - bought cold, then warmed, and then cooled
    - bought warm, cooled, warmed, and re-cooled
  - all must be served at the same final temperature
- tasters
  - can't know which is which in advance
  - don't need to know or like beer
- beer variety
  - type
    - ale
    - lager
    - pilsner
    - stout
  - location of origin
    - imported
    - domestic (coastal)
    - domestic (local)

What I'm up in the air about is exactly how to have the tasters provide their data. Giving them identical beers which have gone through each of the four life cycles and asking them to rate them one through ten would be just about the worst way possible. That's just inviting people to invent differences when the goal is to determine whether there's a difference at all. Of course, even with that terrible mechanism enough repetitions would weed that sort of noise out of the data, but who has that much time?

Currently I'm leaning toward a construct wherein tasters are given two small cups of beer and are simply asked whether they're the same or different. Percentage correct on a simple binary test like that would lend itself well to easy statistical analysis. Making it double-blind and randomized, along with other procedural niceties, could be done easily with only two testers. There should be no problem finding ample tasters.
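
The "percentage correct" analysis amounts to a one-sided binomial test. If half the pairs really are identical and the order is random, a taster who can't tell the difference gets each answer right with probability 0.5 no matter how they guess, so the question is how unlikely the observed score would be by chance. A minimal Python sketch, assuming independent trials and a 0.05 significance threshold (both assumptions, not from the post):

```python
from math import comb


def p_value_at_least(correct, trials, p=0.5):
    """One-sided binomial test: the chance of getting `correct` or more answers
    right out of `trials` by pure guessing, each guess right with probability p."""
    return sum(comb(trials, k) * p**k * (1 - p) ** (trials - k)
               for k in range(correct, trials + 1))


def min_correct_for_significance(trials, alpha=0.05, p=0.5):
    """Smallest number of correct same/different answers that is significant
    at level alpha, or None if even a perfect score isn't."""
    for k in range(trials + 1):
        if p_value_at_least(k, trials, p) <= alpha:
            return k
    return None
```

For example, with 10 trials a taster would need 9 correct answers to clear the 0.05 bar, since 8 or more correct happens by chance about 5.5% of the time.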

What are the things I'm not considering?

Comments

Some discussion from IRC:

    <Joe> ry4an: thoughts on your beer experiment: is it your intention to test each beer variety one at a time, or mix 'n match?
    <Joe> seems to me that you'd get better data on the effects of temperature change by testing them one at a time
    <Joe> also you should test them kind of like an eye doctor tests lenses: A or B? ok now B or C? ok now C or D? and so on
    <Joe> the tasters should be posed this question: "can you determine a difference between these two samples? If so which one tastes 'fresher'?"
    <Ry4an> Joe: definitely one variety at a time. And I agree it's a two at a time test
    <Ry4an> I won't, however, be asking about fresher even.
    <Ry4an> that just adds unneeded data -- I just want "Different or not"
    <Ry4an> and 1/2 of the tests will likely be with the same beer in both cups

More talk later:

    <Vane> ry4an: you should give them three small cups of beer
    <Vane> ry4an: one original, one heated & cooled, and one a completely different beer :)
    <Vane> ry4an: ask them to compare all three and rate how close they are to each other
    <Ry4an> vane: I don't think that's sound. People innately want to rate, but it doesn't give good data.
    <Louis> I like the better/worse/same approach
    <Ry4an> enough tests of rating would overcome the invented differences people create, but I think I can get better accuracy in fewer tests if I don't invite in the imagined rating/ranking directly
    <Ry4an> better/worse is not the question to ask. people invent differences when ranking that they don't when answering booleans
    <Ry4an> ranking gets you more data but it's got more "noise"
    <Louis> hmm, isn't better/worse a boolean? 1 = better, 0 = worse...
    <Louis> I may have to re-read the test again though
    <Louis> the details are fuzzy at best
    <Ry4an> Louis: no, and that perfectly highlights the problem. it's better/worse/same, but no one ever says 'same' because their brain demands a difference
    <Ry4an> and when the whole point of the test is "does it make a difference" the same is *more* important than better/worse but when you ask better/worse/same no one considers same equally
    <Vane> ry4an: i just think you should have a 'control' beer that is obviously different
    <Vane> ry4an: i guess not obviously, but different
    <Ry4an> vane: the control is that 1/2 of all the tests will be w/ identical beer
    <Ry4an> 'identical' is the only absolute one can find with which to control
    <Ry4an> different has an unquantifiable magnitude and thus isn't really a control
    <Vane> ry4an: you can do 'identical' and not 'identical' as control
    <Ry4an> vane: identical is the control, and different is the variable
    <Ry4an> For example w/ heating cycle A, B, C, and D. You might have tests like AA, AB, AA, AC, AD, AA
    <Ry4an> and you expect to hear 'same' the majority of the time on the AA pairing as your control and you compare that to how many times you hear same on the AB, AC, AD tests
    <Vane> you are really testing human perception, the control would be to verify human can actually tell whether something is identical or not identical
    <Ry4an> vane: that's exactly what I'm saying (and you're not suggesting w/ your grossly different beer as "control")
    <Vane> if they can 90% of the time, then you can be assured that 90% your results with the real test is accurate
    <Ry4an> right, so for your control you need actual identical because it's the only absolute you have in a non-quantifiable test
    <Vane> not-identical is an absolute
    <Vane> if someone thinks all beer tastes the same, they just might always vote identical
    <Ry4an> but it's not really. even identical isn't perfectly absolute but it's the closest you can get
    <Ry4an> testing A vs A *no one* should be able to find a difference and if they do you know it's invented
    <Vane> i for one, wouldn't be a good person to take the test, because I am not a beer connoisseur
    <Ry4an> testing A vs Z you have no way of knowing what percentage of the population should be able to detect that difference, but you can't assume it's 100% even if Z is motor oil
    <Vane> i might just say they are close enough...
    <Ry4an> vane: actually I think non beer drinkers would be better
    <Ry4an> "close enough" is the sort of inexactness you're trying to eliminate in a test -- you don't invite it in by using a control that relies on "different enough"
    <Vane> i think non-beer drinkers would be worse, cause they wouldn't take the time necessary to savor/taste
    <Ry4an> that's why same/different is better than worse/better. basic pride will have even a non-beer drinker trying to be the person who most often got 'same' right on the controls whether they like beer or not
    <Ry4an> I suspect that Louis (a beer hater) will try very hard to guess which times he's
    <Ry4an> got identical beers even if it means f
    <Louis> ah, yeah same/diff that's right
    <Louis> I don't hate beer, I just can't stand the taste of the vile liquid
    <Ry4an> heh
    <Vane> so basically shad would always vote they were the same, because they are all vile

Later yet Jenni Momsen and I exchanged some emails on the subject:

On Wed, Mar 02, 2005 at 02:47:32PM -0500, Jennifer Momsen wrote:

> On Mar 2, 2005, at 2:08 PM, Ry4an Brase wrote:
>
> > On Wed, Mar 02, 2005 at 01:48:49PM -0500, Jennifer Momsen wrote:
> >
> > > I read your experimental set-up a while back, and forgot to tell you what I thought. Namely, I think you will find your hypothesis (it's not a theory, yet) not supported by your experiment. Temperature is probably critical to beer quality (I'm thinking of the ideal gas law, here - Eric has some other ideas as to why temperature is probably important). In any case, your experimental design could be improved.
> >
> > They're all to be served at the same temperature; it's just the temperatures through which they pass that I'm wondering about. What's more, what I'm really wondering is if the temperatures through which they pass after I purchase them matter given all the temperatures through which they likely passed before I got a crack at them. I agree it's possible that keeping it within a certain temperature range for all of its life may yield a better drinking beer, but I also suspect that what damage can be done has already been done during shipping.
>
> Yes, this was clear. I think temperature is of such importance that when shipping, manufacturers DO pay attention to temperature. But hey, I'm an optimist.

I suspect the origin and destination are probably promised some form of temperature control, but I suspect in actuality so long as the beer doesn't freeze and explode the shipper doesn't care a whit.

> > > 1. By having a binary choice, you leave your experiment open to inconsistencies in rating one beer over another.
> >
> > Explain. I'd never be having someone compare two different beers, just two like beers with different temperature life-cycles.
>
> Right. But, what happens when 1a does not repeatedly = 1b for a particular taster?

It's the extent of the repeatability that I want to know. If the testers are right 50% of the time then I'll have to say it makes no difference. If they're right a statistically significant percentage of the time greater than 50, then it apparently does make a difference.

> > > 2. Tasters will probably say different more times than not - an inherent testing bias (i.e. if this is a test, they must be different).
> >
> > I was thinking of telling them in advance that 50% of the time they'll be the same, but I don't know if that's good or bad policy.
>
> I think that's called bias. Bias is always bad. However, a clear statement of the possible treatments they could encounter should alleviate this. But it's still a form of bias that must be acknowledged.

Definitely. I just think you're exactly right that with no prior information people would say 'different' more often than they say 'same', and I was trying to come up with some way to curb that in general without affecting any one trial more than any other.

> > > 3. Reconsider having tasters rate the beer on a series of qualities (color, bitterness, smoothness, etc). This helps to avoid #1 and 2 above, and provides more information for your experiment. This is what's typically done in taste tests (for example, a recent bitterness study first grouped tasters into 3 groups (super tasters, tasters, non-tasters) and then had us rate several characteristics of the food, not just: is the bitterness between these two samples the same?)
> >
> > I don't see how that improves either. I'm the first to admit I don't know shit about putting this sort of thing together, but I don't want data on color, bitterness, smoothness, etc. I understand that if temperature life-cycle really does make no difference then all that data will, with enough samples, be expected to match up, but if I'm not interested in the nature or magnitude of the differences -- only if one exists at all -- why collect it and inject more noise?
>
> You are right, this does add more data. It doesn't necessarily add noise (well, yes it does, when you go from a binary system to a scaling system). I know you don't want data on these factors, you just want to know whether temperature makes for different beers. But as a scientist, I always want to design experiments that can do more than just discover if variable X really matters. I'm interested in bigger pictures. So yes, you can use a simple design to discover if temperature makes for different beers, but in the end you are unable to answer the ubiquitous scientific question: So what?

Right, whereas all I want to get from this is the ability to say at a party (in a snooty voice), "Actually, you're wrong; it doesn't matter at all," if indeed that's the case. What's more, I know whatever small amount of statistical knowledge I once had has atrophied to the point where I can barely determine "statistically significant" for a given number of trials with an expected no-correlation probability of 0.5, and I know I couldn't handle much more than that analysis-wise without pestering people or re-reading books I didn't like the first time.

> > > Eric's boss started life selling equipment to beer makers in England. I will nag Eric to ask him about the temperature issues.
> >
> > Excellent, thanks. I think that transportation period is the real culprit. I don't doubt they're _very_ careful about temperature during the brewing, but I can't imagine the trans-Atlantic cargo people care much at all. I know there exist recording devices which can be included in shipments which sample temperature and other environmental numbers and record them for later display vs. time, but I wouldn't imagine the beer importers use anything like that routinely.
>
> Why not? Certainly not cheap beers, but higher quality imports might, no? Again, the optimist.

And once some movers promised me that furniture would arrive undamaged due to the great care their conscientious employees demonstrate...

Jenni's research turned up this reply:

Temperature, schmemperature. According to Mad Dog Dave (Eric's boss), manufacturers rarely worry about temperature, at nearly any stage of the process. From brewing to bottling, transportation to storage, they really could care less. So despite my best effort at optimism, pessimism flattens all.

SwarmStream Public Edition

My latest project for Onion Networks has just been released: it's a first beta release of SwarmStream Public Edition, a completely free Java protocol handler plug-in that transparently augments any HTTP data transfer with caching, automatic fail-over, automatic resume, and wide-area file transfer acceleration.

SwarmStream Public Edition is a scaled-down version of our commercially-licensable SwarmStream SDK. Both systems are designed to provide networked applications with high levels of reliability and performance by combining commodity servers and cheap bandwidth with intelligent networking software.

Using SwarmStream Public Edition couldn't be easier: you just set a system property that adds its package to the list of Java protocol handler packages, like so:

    java -Djava.protocol.handler.pkgs=com.onionnetworks.sspe.protocol you.main.Class

So, if you're doing any sort of HTTP data transfer in your Java application, there is no reason not to download SSPE and try it out with your application. There are no code changes required at all.

Who Wins 'Click Here'

The W3C, the nominal leader of web standards, recommends against using "click here" or "here" as the text for links on web pages. In addition to the good reasons they provide, there's Google to consider. Google assigns page rank to web sites based on, among a great many other things, the text used in links to that page. When you link to https://ry4an.org/ with ry4an as the link text, I get more closely associated with the term ry4an in Google's rankings. However, when you link to a page using generic link text, such as "here" or "click here", you're not really helping anyone find anything more easily.

That said, I wondered who was winning the battle for Google's "here" and "click here" turf. To find out, I took the top entries for each and then googled them with both "click here" and "here". Here's the resulting table.

| Phrase | "here" rank | "here" hits | "click here" rank | "click here" hits |
|---|---|---|---|---|
| Adobe | 1 | 14,300,000 | 1 | 11,000,000 |
| QuickTime | 2 | 3,560,000 | 2 | 1,830,000 |
| "Real Player" | 3 | 1,720,000 | 4 | 900,000 |
| "Internet Explorer" | 4 | 13,500,000 | 3 | 7,940,000 |
| MapQuest | 5 | 1,970,000 | 5 | 1,130,000 |
| ShockWave | 7 | 1,240,000 | 6 | 564,000 |

So, what can we conclude from these numbers? Nothing at all. They provide only an aggregate of popularity, web design savvy, and a bunch of other unidentified factors. But, there they are just the same.

Better Random Subject Lines

Earlier I talked about generating random Subject lines for emails. I settled on something that looked like Subject: Your email (1024). Those were fine, but they got dull quickly. By switching the procmail rules to look like:

    # Subject: header present but empty
    :0 fhw
    * ^Subject:[\ ]*$
    |formail -i "Subject: RANDOM: $(fortune -n 65 -s | perl -pe 's/\s+/ /g')"

    # no Subject: header at all; perl squashes fortune's newlines into single spaces
    :0 fhw
    * !^Subject:
    |formail -i "Subject: RANDOM: $(fortune -n 65 -s | perl -pe 's/\s+/ /g')"

I'm now able to get random subject lines with a little more meat to them. They come out looking like: RANDOM: The coast was clear. -- Lope de Vega

However, given that the default fortune data files only provide 3371 sayings that are 65 characters or under, the birthday paradox will cause a subject collision a lot sooner than with the 2^15 possible subject lines I had before.
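
To put rough numbers on that, here is a Python sketch of the birthday-paradox calculation. It assumes every subject line is equally likely, which is a simplification: fortune doesn't necessarily pick its sayings uniformly.

```python
def collision_probability(messages, subjects):
    """Chance that at least two of `messages` emails share a subject line when
    each subject is drawn uniformly at random from `subjects` possibilities."""
    p_all_distinct = 1.0
    for i in range(messages):
        p_all_distinct *= (subjects - i) / subjects
    return 1.0 - p_all_distinct


def messages_until_even_odds(subjects):
    """Smallest number of messages for which a repeat is at least 50% likely."""
    n = 1
    while collision_probability(n, subjects) < 0.5:
        n += 1
    return n
```

Under that uniform assumption a repeated subject becomes more likely than not after roughly 70 messages with 3371 sayings, versus a bit over 200 with 2^15 numeric subjects.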

Update: It's been a few weeks since I've had this in place, and my principal subject-less correspondent has noticed how eerily often the random subject lines match the topic of the email.

Jetty with Large File Support

Jetty is a great Java servlet container and web server. It's fully embeddable, and at OnionNetworks we've used it in many of our products. It does, however, have the same 2GiB file size limit that a lot of software does. This limit comes from using a 32-bit-wide value to store file size, yielding a 4GiB (unsigned) or 2GiB (signed) maximum, and represents a real design gaffe on the part of the developers.
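
The arithmetic behind those limits is easy to check. This Python sketch is only an illustration, not Jetty code: it shows the signed and unsigned 32-bit maxima and what happens when a larger length is forced into a signed 32-bit field, which is what a Java `(int)` cast of a `long` does.

```python
INT32_MAX = 2**31 - 1   # largest signed 32-bit value: 2 GiB minus one byte
UINT32_MAX = 2**32 - 1  # largest unsigned 32-bit value: 4 GiB minus one byte


def as_int32(n):
    """Reinterpret n the way a signed 32-bit int field stores it: only the low
    32 bits survive, so values past INT32_MAX wrap around to negative numbers."""
    return (n + 2**31) % 2**32 - 2**31


three_gib = 3 * 2**30
print(as_int32(three_gib))  # -1073741824
```

A 3 GiB file's length therefore comes out negative, which is why such code tends to fail outright rather than merely truncate.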

Here at OnionNetworks we needed that limit eliminated, so last year I twiddled the fields and modified the accessors wherever necessary. After I initially offered a fix and eventually posted it, it looks like Greg is getting ready to include it, thanks in part to external pressure. Now if only Sun would fix the root of the problem.


Content License

This work is licensed under a Creative Commons Attribution-NonCommercial 3.0 Generic License.