Tag: ideas-built

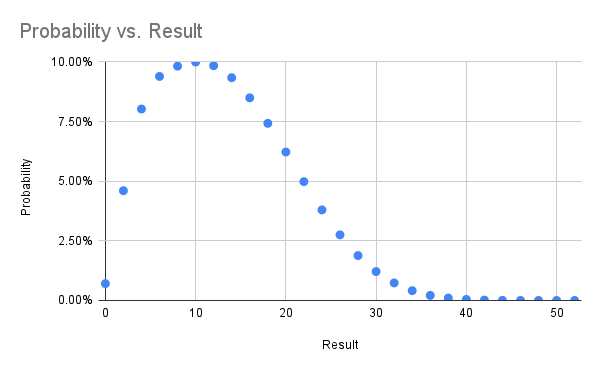

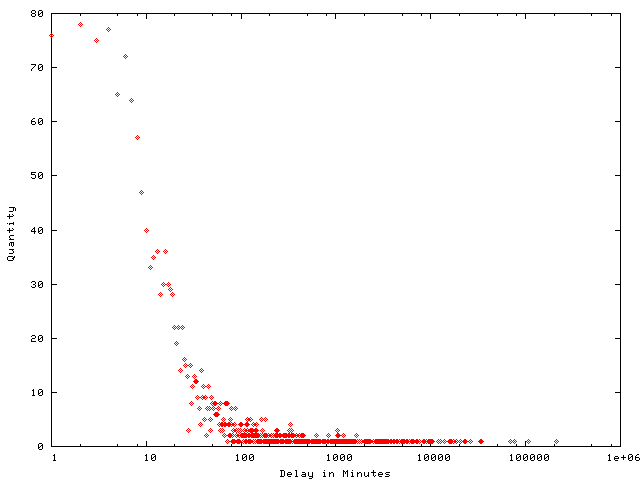

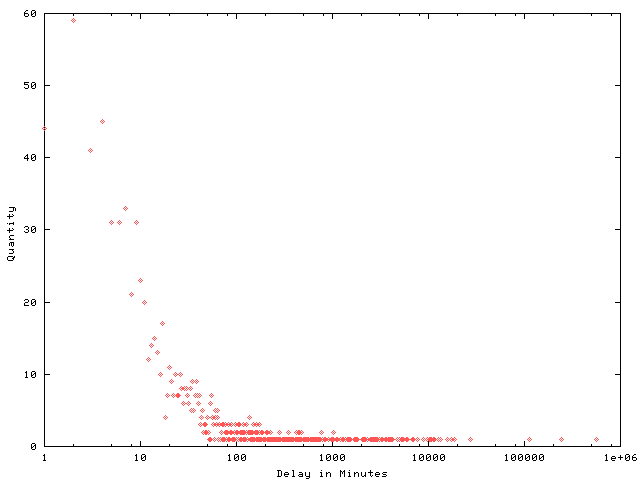

Outcome Probability for One Handed Solitaire

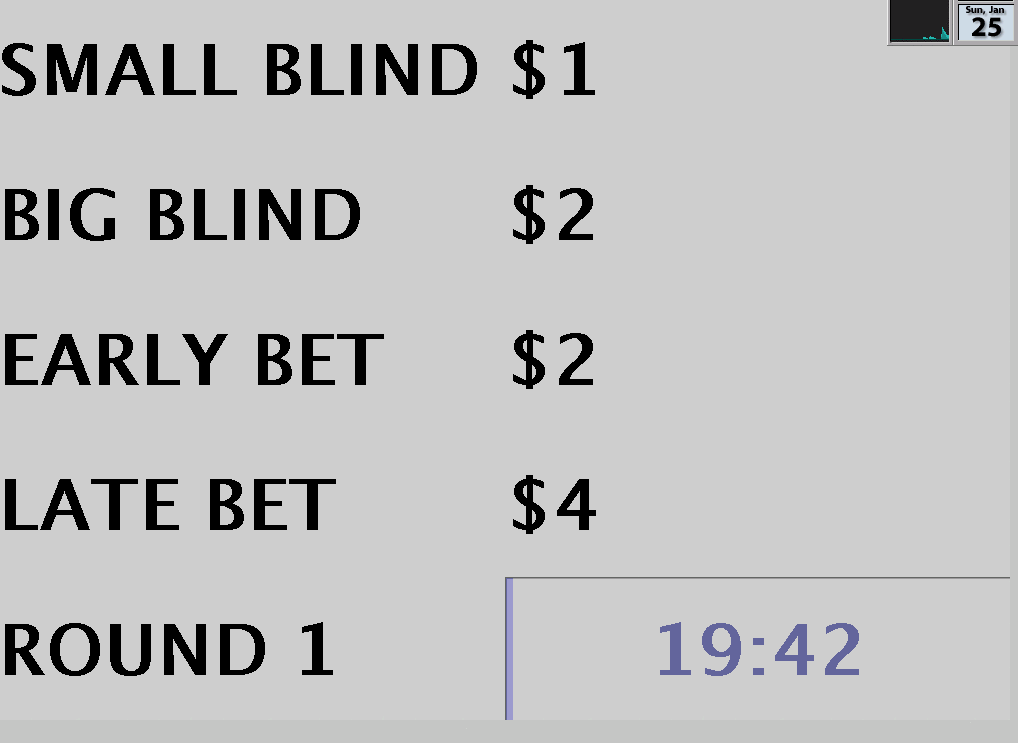

Back in 1994 my circle of high school friends spent a lot of time sitting around talking (there were no cell phones) and for about a week we were all playing one handed solitaire. In suburban St. Louis we called it idiot's delight solitaire (which turns out to be an entirely different game), because there is absolutely no human input after the shuffle. As soon as you've started playing it's already determined whether you've won -- you just spend five minutes learning if you did.

Naturally we wondered how likely our very rare wins were, and being a computer nerd back then too I wrote a Pascal(!) program to simulate the game and arrived at the conclusion you win one in every 142 games.

Now thirty years later I've taught the game to the eleven year old in our home, who is just back from a phone-free summer camp with a deck of cards and dubious shuffling skills.

My old Pascal is lost to bitrot, but the game in Python, using a somewhat janky off-the-shelf deck_of_cards module, is trivial:

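```python
from deck_of_cards import deck_of_cards


def play():
    """Play one shuffled deck; return the cards left in hand (0 is a win)."""
    deck = deck_of_cards.DeckOfCards()
    hand = []
    while not deck._deck_empty():
        hand.append(deck.give_random_card())
        # Keep comparing the newest card with the one three places back
        # until no more discards are possible.
        while len(hand) > 3:
            if hand[-1].suit == hand[-4].suit:
                del hand[-3:-1]  # same suit: discard the two cards between them
            elif hand[-1].rank == hand[-4].rank:
                del hand[-4:]  # same rank: discard all four
            else:
                break
    return len(hand)
```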
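For anyone without the deck_of_cards module, here is a minimal stdlib-only sketch of the same rules plus a Monte Carlo loop to estimate the win rate. The tuple card representation and the names `new_deck`, `play_order`, and `estimate_win_rate` are hypothetical, not part of any library; shuffling uses Python's `random.Random`.

```python
import random
from typing import List, Sequence, Tuple

Card = Tuple[str, str]  # (rank, suit)

RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
SUITS = ["C", "D", "H", "S"]


def new_deck() -> List[Card]:
    """An unshuffled 52-card deck."""
    return [(rank, suit) for suit in SUITS for rank in RANKS]


def play_order(cards: Sequence[Card]) -> int:
    """Deal cards in the given order; return how many are left in hand (0 is a win)."""
    hand: List[Card] = []
    for card in cards:
        hand.append(card)
        while len(hand) > 3:
            newest, three_back = hand[-1], hand[-4]
            if newest[1] == three_back[1]:
                del hand[-3:-1]  # same suit: discard the two cards between them
            elif newest[0] == three_back[0]:
                del hand[-4:]  # same rank: discard all four
            else:
                break
    return len(hand)


def estimate_win_rate(trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate of the fraction of shuffles that end with an empty hand."""
    rng = random.Random(seed)
    deck = new_deck()
    wins = 0
    for _ in range(trials):
        rng.shuffle(deck)  # play_order never mutates deck, so reshuffling in place is fine
        if play_order(deck) == 0:
            wins += 1
    return wins / trials
```

Since the rules match `play()` above, a large run such as `estimate_win_rate(1_000_000)` should come out near the one-in-142 figure, though pure Python takes a while at that size.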

On the 486 I was running at the time I recall getting ten thousand or so runs over many days. On a tiny Linode in the modern era I got 50 million runs in 15ish hours.

I've gathered the results in a spreadsheet and included some probability graphs below. In trying to find the real name of this game I came across a previous analysis from 2014 which comes to the same overall probability. That blog post is now down (hence the archive.org links) with ominous messages telling folks the author is definitely no longer thinking about this game.

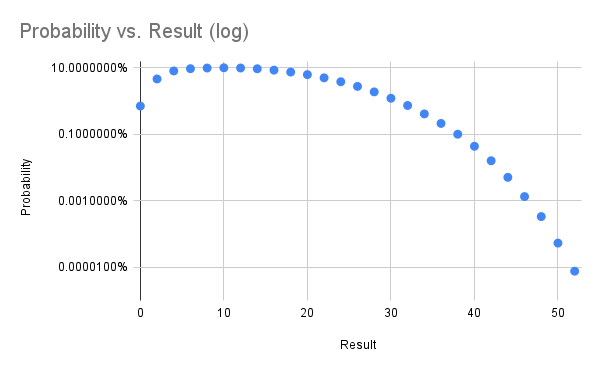

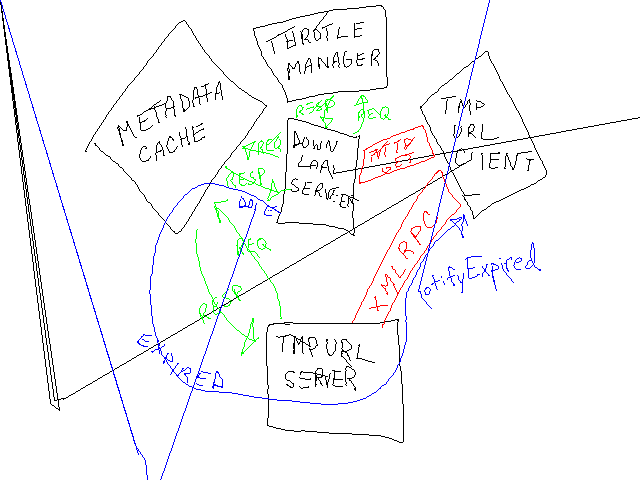

A Gamebook Report with Graphviz, Google Sheets, Python, and Jupyter/Colab

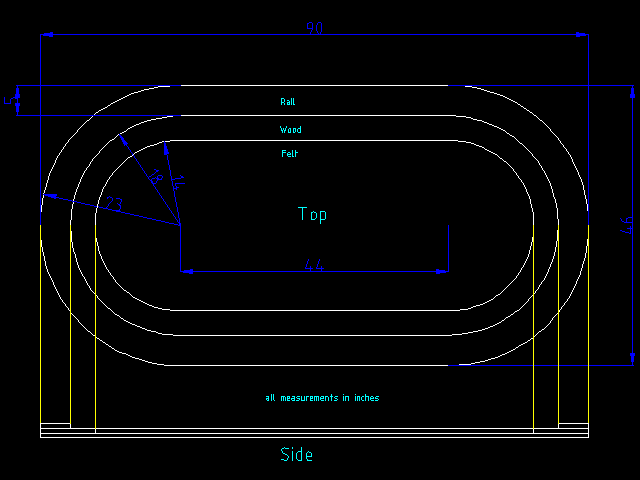

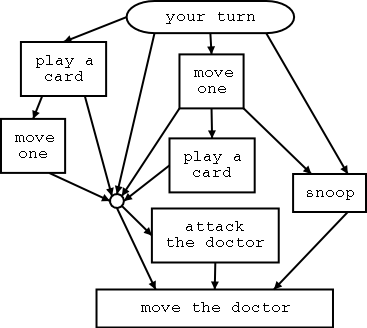

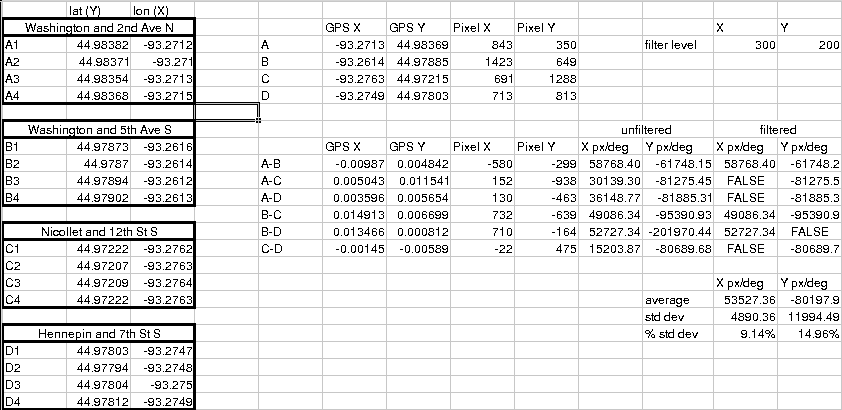

An 11 year old in our house needed to do a book report for school in the form of a board game and selected a gamebook, apparently the generic name for the trademarked Choose Your Own Adventure books. The non-linear narrative made the choice of board layout easy -- just use the graph of page transitions ("Turn to page 110").

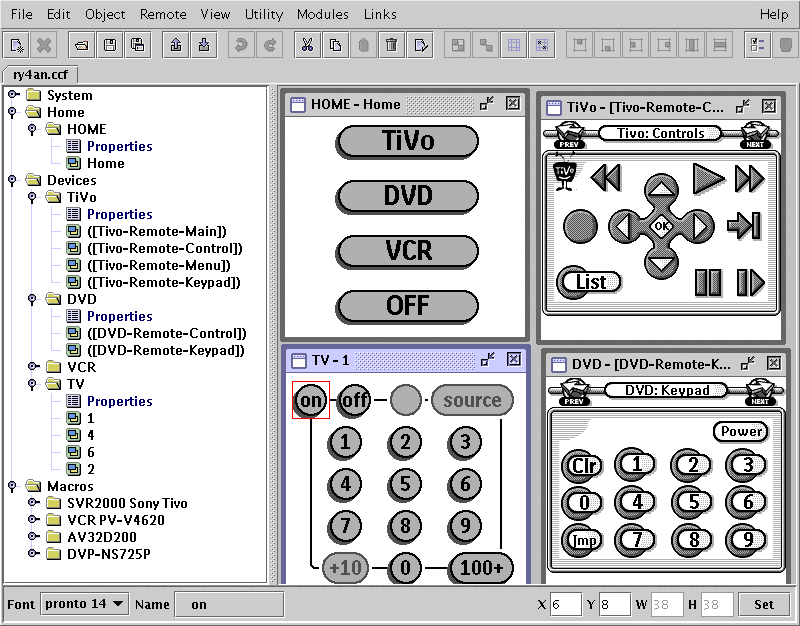

The graphviz library is always my first choice when I want to visualize nodes and edges, and the python graphviz module provides a convenient way to get data into a renderable graph structure.

I wanted to work with the 11 year old as much as possible, so I picked a programming environment that can be used anywhere, Jupyter notebooks, and we ran it in Google's free hosted version, Colab.

The data entry was going to be the most time consuming part of the project, and something we wanted to be able to work on both together and apart. For that I picked a Google sheet. It gave us the right mix of ease of entry, remote collaboration, and a familiar interface. Python can read from Google sheets directly using the gspread module, saving a transcription/import step.

It took us a few weeks of evenings to enter the book's info into the data spreadsheet. The two types of data we needed were places, essentially nodes, and decisions, which are edges. For every place we recorded starting page, a description of what happens, and the page where you next make a decision or reach an ending. For every decision we recorded the page where you were deciding, a description of the choice, and the page to which you'd go next. As you can see in the data spreadsheet that was 139 places/nodes and 177 decisions/edges.

Once we'd entered all the data we were able to run a short Python program to load the data from the spreadsheet, transform it into a graph object, and then render that graph as a PDF file. We printed that on a large-format printer, and then the 11 year old layered on art, puzzles, rules, and everything else that turns a digraph into a playable game. The final game board is shown below, with a zoomed section in the album.
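The notebook itself isn't reproduced here, but a minimal sketch of the transform step might look like the following, assuming the two sheets have already been read into lists of dicts (gspread's `Worksheet.get_all_records()` returns rows in that shape). The column names (`page`, `description`, `decision_page`, `from_page`, `choice`, `to_page`) are hypothetical. To avoid depending on the graphviz package, it emits DOT text directly; with the graphviz module the equivalent calls are `Digraph.node()` and `Digraph.edge()`, and `render()` writes the PDF.

```python
from typing import Dict, List


def quote(text) -> str:
    """Quote a value as a DOT string ID, escaping backslashes and double quotes."""
    return '"' + str(text).replace("\\", "\\\\").replace('"', '\\"') + '"'


def to_dot(places: List[Dict], decisions: List[Dict]) -> str:
    """Build DOT source: one node per place, one labeled edge per decision."""
    # Map each decision page back to the place whose text leads to it.
    owner = {place["decision_page"]: place["page"] for place in places}
    lines = ["digraph gamebook {"]
    for place in places:
        lines.append(f"  {quote(place['page'])} [label={quote(place['description'])}];")
    for decision in decisions:
        tail = owner.get(decision["from_page"], decision["from_page"])
        lines.append(
            f"  {quote(tail)} -> {quote(decision['to_page'])} "
            f"[label={quote(decision['choice'])}];"
        )
    lines.append("}")
    return "\n".join(lines)
```

The resulting text can be saved to a `.dot` file and rendered with `dot -Tpdf`.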

One interesting thing about this particular book that was only evident once the full graph was in front of us was that the very first choice in the book splits you into one of two trees that never reconnect. Lots of later choices in the book loop back and cross over, but that first choice splits you into one of two separate books.
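Spotting that split by eye worked, but it can also be checked mechanically: collect every page reachable from each of the first choice's two destinations and confirm the two sets share nothing. A minimal breadth-first-search sketch over a hypothetical list of `(from_page, to_page)` edges:

```python
from collections import deque
from typing import Dict, Hashable, Iterable, List, Set, Tuple

Edge = Tuple[Hashable, Hashable]  # (from_page, to_page)


def reachable(edges: Iterable[Edge], start: Hashable) -> Set[Hashable]:
    """All pages reachable from start (including start), via breadth-first search."""
    graph: Dict[Hashable, List[Hashable]] = {}
    for tail, head in edges:
        graph.setdefault(tail, []).append(head)
    seen = {start}
    frontier = deque([start])
    while frontier:
        page = frontier.popleft()
        for nxt in graph.get(page, []):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(nxt)
    return seen


def branches_never_meet(edges: Iterable[Edge], first: Hashable, second: Hashable) -> bool:
    """True when no page is reachable from both of the first choice's destinations."""
    edges = list(edges)  # the edges are walked twice
    return not (reachable(edges, first) & reachable(edges, second))
```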

I've omitted the title and author info from the book to stop this giant spoiler from showing up on google searches, but the 11 year old assures me it was a good, fun read and recommends it.

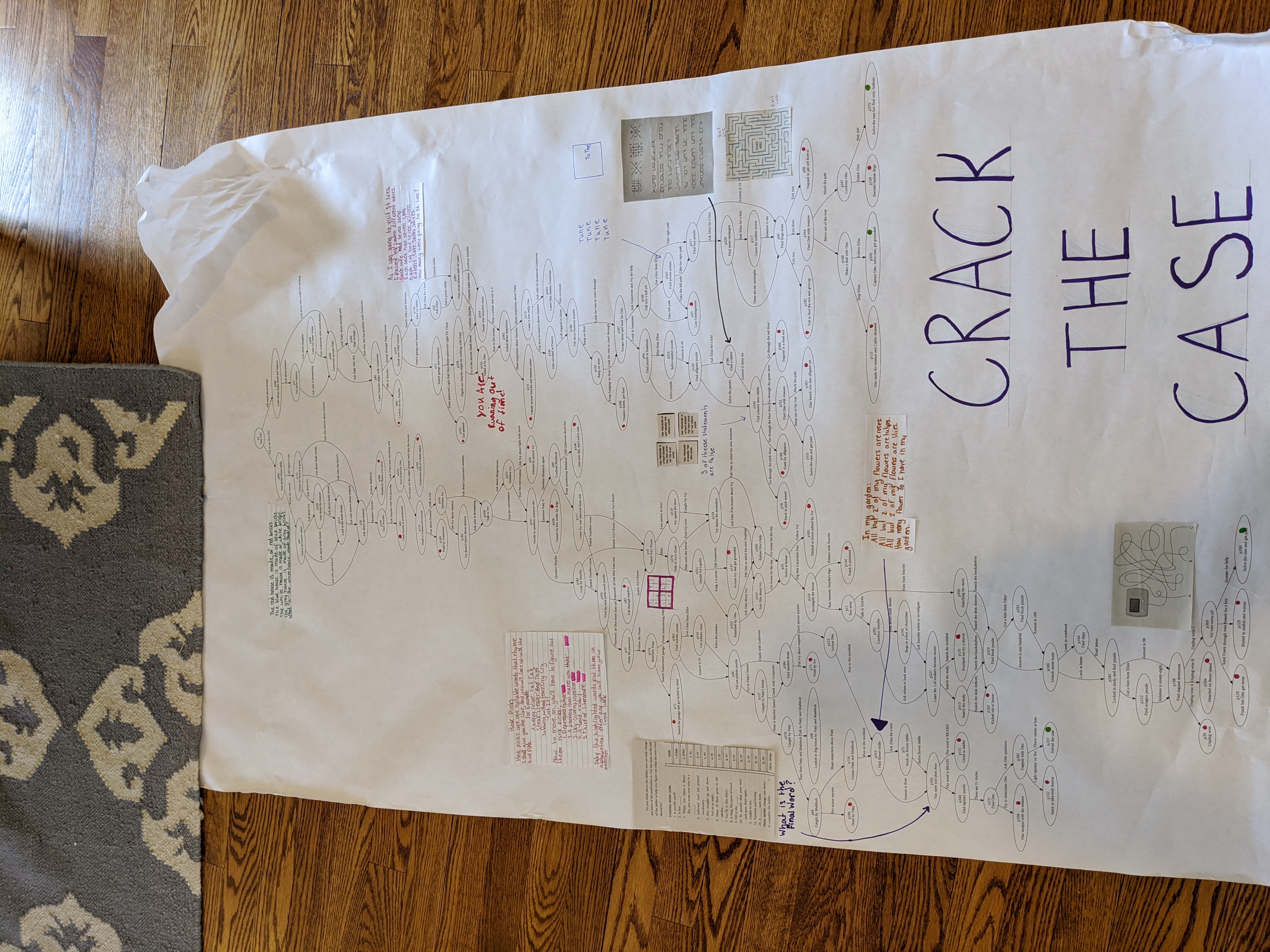

Kindle Highlights and Ratings

When reading I've always underlined sentences that make me happy. Once the kids got old enough to understand there's no email or fun on a Kindle I switched from dead tree books, and now the underlining is stored in Amazon's datacenters.

After a few years of highlighting on Kindle I started to wonder if the number of sentences that I liked and the eventual five-star scale rating I gave a book had any correlation. Amazon owns Goodreads and Kindle services sync data into Goodreads, but unfortunately highlight data isn't available through any API.

I was able to put together a little Python to scrape the highlight counts per book (yay, BeautifulSoup) and combine it with page count and rating info from the Goodreads APIs. Our family scientist explained "the statistical tests to compare values of a continuous variable across levels of an ordinal variable", and there was no meaningful relationship. Still it makes a nice picture:
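The exact test isn't named above. One common choice for a continuous measure against an ordinal rating is Spearman's rank correlation, sketched here in plain Python as an illustration, not necessarily the test that was actually used. Values near 0 indicate no monotonic relationship.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of the positions they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with this one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # average of positions start+1 .. end+1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def spearman(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Spearman's rho: the Pearson correlation of the two rank lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences of at least two values")
    rx, ry = average_ranks(xs), average_ranks(ys)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when every value is the same")
    return cov / (var_x * var_y) ** 0.5
```

Usage would be something like `spearman(highlights_per_page, ratings)`, where both lists (one entry per book) are hypothetical inputs built from the scraped and Goodreads data.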

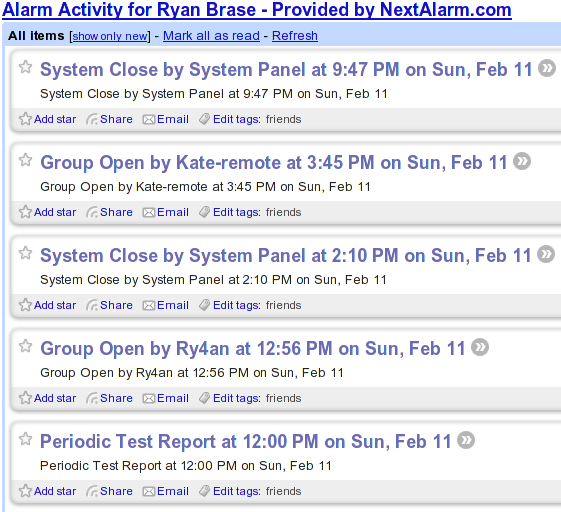

Home Alarm Analytics With AWS Kinesis

Home security system projects are fun because everything about them screams "1980s legacy hardware design". Nowhere else in the modern tech landscape does one program by typing in a three digit memory address and then entering byte values on a numeric keypad. There's no enter-key -- you fill the memory address. There's no display -- just eight LEDs that will show you a byte at a time, and you hope it's the address you think it is. Arduinos and the like are great for hobby fun, but these are real working systems whose core configuration you enter byte by byte.

The feature set reveals 30 years of crazy product requirements. You can just picture the well-meaning sales person who sold a non-existent feature to a huge potential customer, resulting in the boolean setting that lives at address 017 bit 4 and whose description in the manual is:

ON: The double hit feature will be enabled. Two violations of the same zone within the Cross Zone Timer will be considered a valid Police Code or Cross Zone Event. The system will report the event and log it to the event buffer. OFF: Two alarms from the same zone is not a valid Police Code or Cross Zone Event

I've built out alarm systems for three different homes now, and while occasionally frustrating it's always a satisfying project. This most recent time I wanted an event log larger than the 512 events I can view a byte at a time. The central dispatch service I use will sell me back my event log in a horrid web interface, but I wanted something programmatically accessible and ideally including constant status.

The hardware side of the solution came in the form of the Alarm Decoder from Nu Tech. It translates alarm panel keypad bus events into events on an RS-232 serial bus, which I feed into a Raspberry Pi. From there the alarmdecoder package on PyPI lets me get at decoded events as Python objects. But I wanted those in a real datastore.
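The rest of that pipeline isn't shown here. As a hedged sketch of the hand-off, assuming each decoded event has already been turned into a plain dict (any field names are hypothetical), events can be serialized into the `{"Data": ..., "PartitionKey": ...}` entries that boto3's Kinesis `put_records(StreamName=..., Records=...)` call accepts, in batches of at most 500, the PutRecords per-request limit.

```python
import json
from typing import Dict, Iterator, List

MAX_RECORDS_PER_CALL = 500  # Kinesis PutRecords accepts at most 500 records per request


def to_kinesis_records(events: List[Dict], partition_key: str = "house") -> List[Dict]:
    """Serialize event dicts as JSON bytes, shaped for Kinesis PutRecords."""
    return [
        {"Data": json.dumps(event, sort_keys=True).encode("utf-8"), "PartitionKey": partition_key}
        for event in events
    ]


def batches(records: List[Dict], size: int = MAX_RECORDS_PER_CALL) -> Iterator[List[Dict]]:
    """Split records into chunks no larger than one PutRecords call allows."""
    for start in range(0, len(records), size):
        yield records[start:start + size]
```

With a single house, one constant partition key (the hypothetical `"house"` default) keeps events in order on one shard. A real sender should also check the response's `FailedRecordCount` and retry any failed entries.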

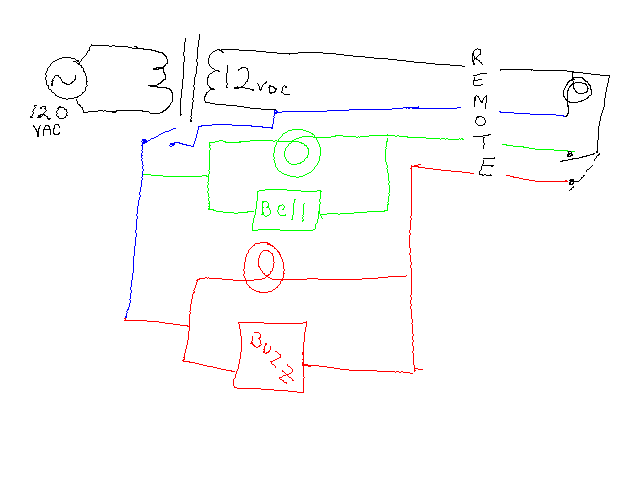

Raspberry Pi UPS

I'm starting to do more on a Raspberry Pi I've got in the house, and I wanted it to survive short power outages. I looked at buying an off-the-shelf Uninterruptible Power Supply (UPS), but it just struck me as silly that I'd be using my house's 120V AC power to fill a 12V DC battery, to be run through an inverter into 120V AC again, to be run through a transformer into DC yet again. When the house is out of power that seemed like a lot of waste.

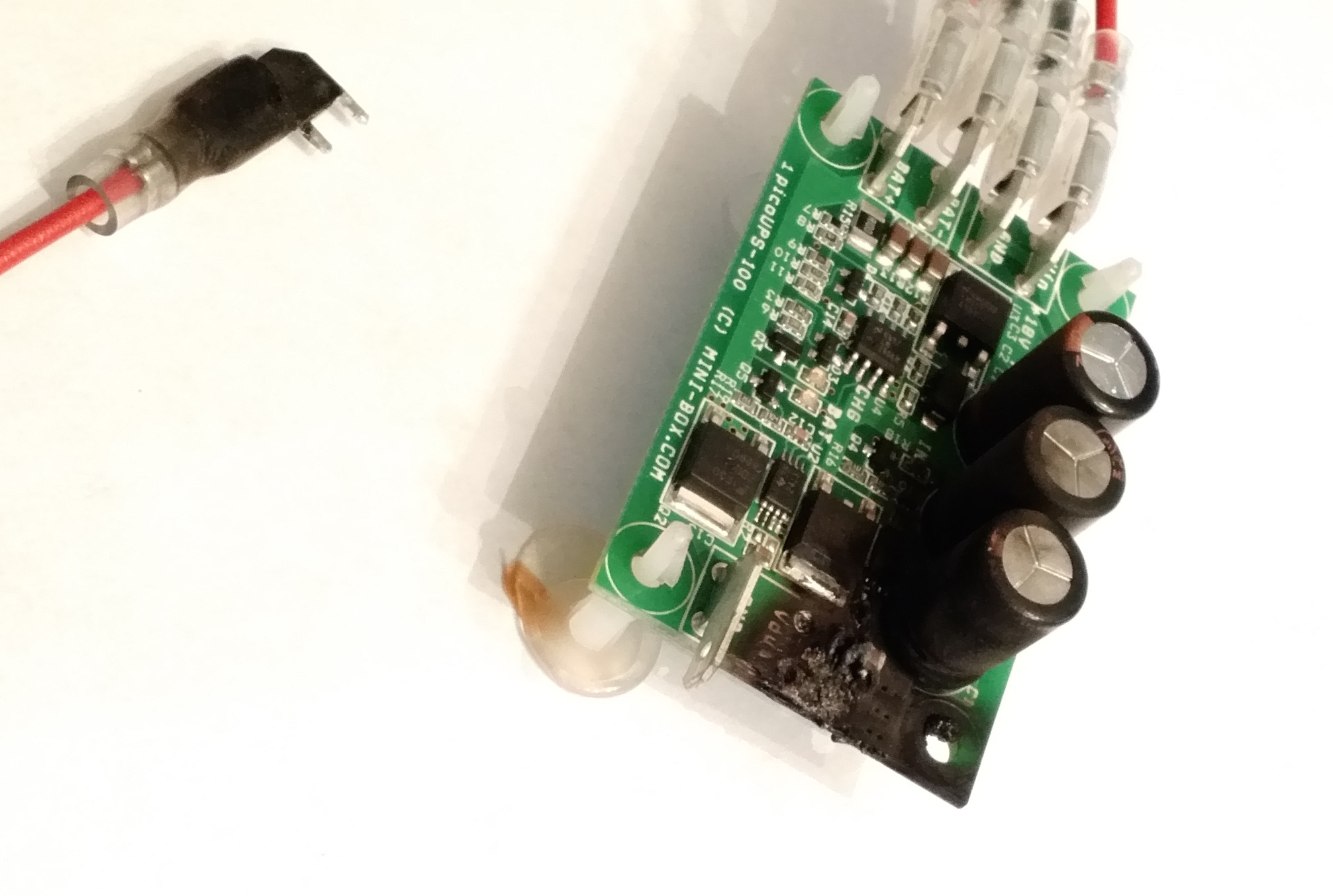

A little searching turned up the PicoUPS-100 UPS controller. It seems like it's mostly used in car applications, but it has two DC inputs and one DC output and handles the charging and fast switching. The non-battery input needs to be greater than the desired 12 volts, so I ebayed a 15V power supply from an old laptop. I added a voltage regulator and buck converter to get solid 12V (router) and 5V (RPi) outputs. Then it caught on fire:

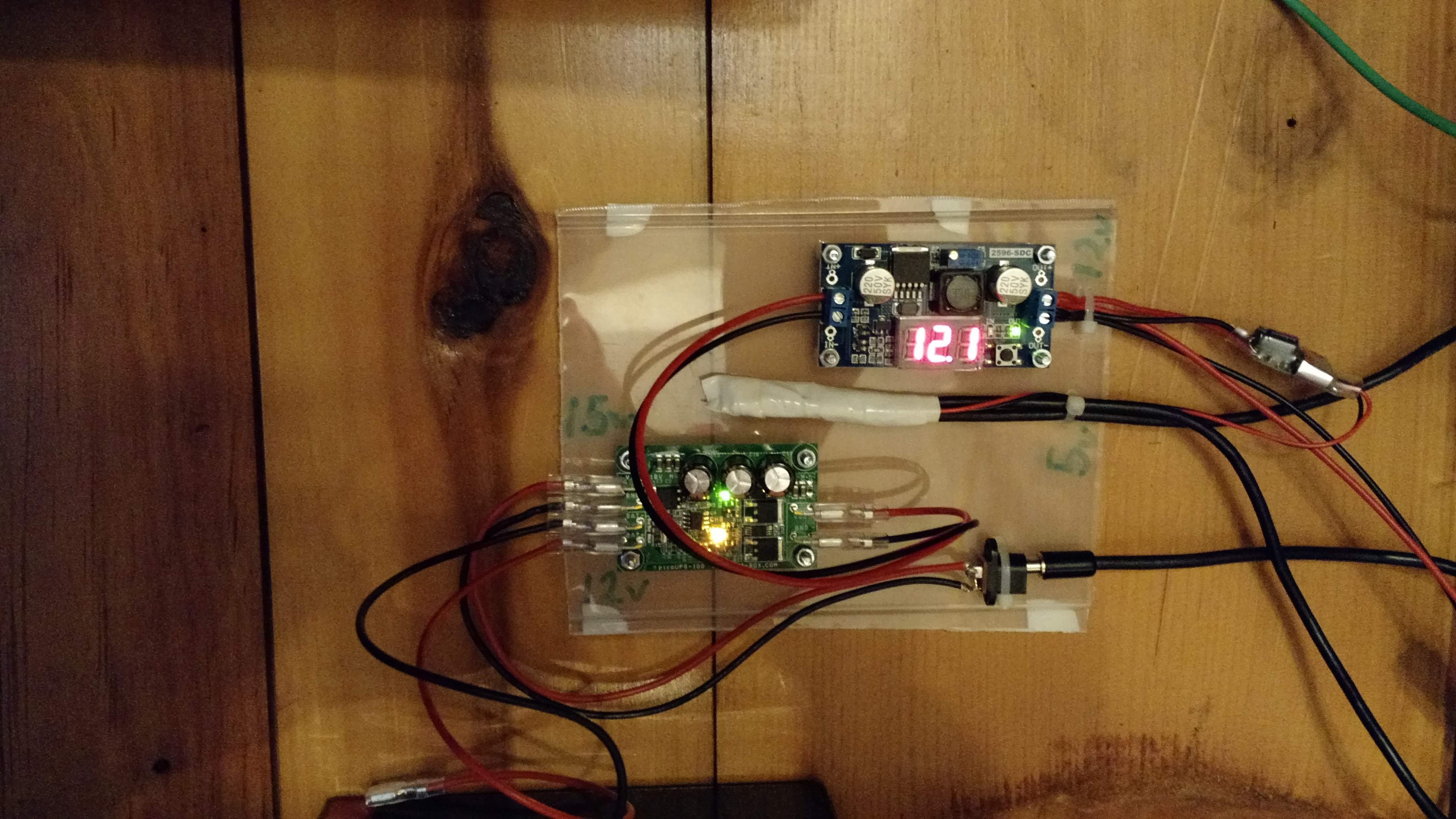

But I re-bought the charred parts, and the second time it worked just fine:

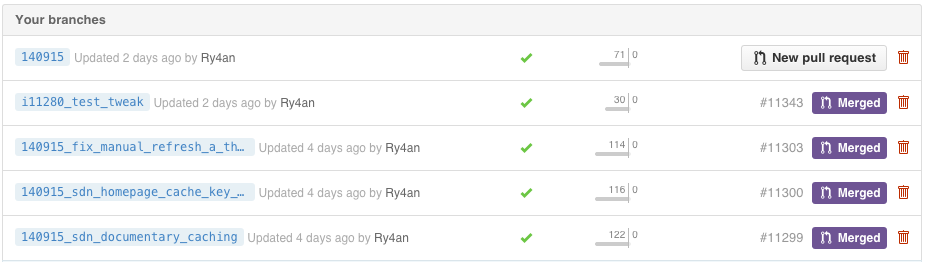

Pylint To GitHub

I spent a few hours trying to get the Jenkins Git & GitHub plugins to:

- run pylint on all remote branch heads that:
  - aren't too old
  - haven't already had pylint run on them
- send the repo status back to GitHub

I'm sure it's possible, but the Jenkins Git plugin doesn't like a single build to operate on multiple revisions. The repo statuses weren't posting, the wrong branches were getting built, and it was easier to write a quick script.

Now whenever someone pushes code at DramaFever pylint does its thing, and their most recent commit gets a green checkmark or a red cross. If/when they open a PR the status is already ready on the PR and warns folks not to merge it if pylint is going to fail the build. They can keep heaping on commits until the PR goes green.

I run it from Jenkins, triggered by a GitHub push hook, but it's set up so that even running it from cron every minute is safe for those without a CI server yet.
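The script itself isn't reproduced here. The core decision it has to make can be sketched as follows, assuming pylint's documented bit-mask exit status and GitHub's commit status API (`POST /repos/{owner}/{repo}/statuses/{sha}` with a `state` of `success` or `failure`). Which pylint bits count as a failure is a hypothetical policy choice, and actually sending the request with an auth token is left out.

```python
from typing import Dict, Tuple

# Pylint's exit status is a bit mask of the message categories it emitted.
FATAL, ERROR, WARNING, REFACTOR, CONVENTION, USAGE_ERROR = 1, 2, 4, 8, 16, 32

# Hypothetical policy: fatal messages, errors, and usage errors fail the check;
# warnings, refactor, and convention messages do not.
FAILING_BITS = FATAL | ERROR | USAGE_ERROR


def github_status(owner: str, repo: str, sha: str, pylint_exit_code: int) -> Tuple[str, Dict[str, str]]:
    """Build the URL and JSON body for a GitHub commit status from pylint's exit code."""
    failed = bool(pylint_exit_code & FAILING_BITS)
    url = f"https://api.github.com/repos/{owner}/{repo}/statuses/{sha}"
    body = {
        "state": "failure" if failed else "success",
        "context": "pylint",
        "description": "pylint found errors" if failed else "pylint passed",
    }
    return url, body
```

Using a fixed `context` means a later push replaces the earlier pylint status on that commit rather than adding a second one.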

Bitcoin Conversion In Google Spreadsheets

I've been using Charlie Lee's excellent Google Spreadsheet Bitcoin tracker sheet for a while, but it pulls data from a single exchange at a time and relies on the ordering of those exchanges on the bitcoinwatch.com site, which varies with volume.

I figured out I could get better numbers more reliably from bitcoinaverage.com, which (predictably) averages multiple exchanges over various time periods. They offer a great JSON API, but unfortunately Google spreadsheets only export JSON -- they don't have a function for importing it.

Nonetheless, I was able to fake it using a regex. You can pull the 24 hour average price in with this formula:

```
=regexextract(index(importdata("https://api.bitcoinaverage.com/ticker/USD"),2,1), ": (.*),")+0
```

If you want that to update live (not just when you open the spreadsheet) you need to use Charlie's hack to get the sheet to think the formula depends on live stock data:

```
=regexextract(index(importdata("https://api.bitcoinaverage.com/ticker/USD?workaround="&INT(NOW()*1E3)&REPT(GoogleFinance("GOOG"),0)),2,1), ": (.*),")+0
```

I've put together a sample spreadsheet based on Charlie's.

Occupied: Twine + Go + App Engine

In our NY office we've got 40 people working in a space with two bathrooms. Walking to the bathrooms, finding them both occupied, and grabbing a snack instead is a regular occurrence. For a lark I took a Twine with the breakout board and a few magnetic switches and connected them to the overtaxed bathroom doors.

The good folks at Twine will invoke a web hook on state change, so I created a tiny webapp in Go that takes the GET from Twine and stashes it in the App Engine datastore. I wrote a cheesy web front end to show the current state based on the most recent change. It also exposes a JSON API, allowing my excellent coworkers to build a native OS X menulet and a much nicer web version.
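The Go app isn't shown here. As an illustration in Python, with hypothetical door names and a hypothetical `(timestamp, door, state)` event shape rather than Twine's actual webhook payload, deriving the current state from the most recent change and serving it as JSON needs little more than:

```python
import json
from typing import Dict, Iterable, Tuple

Event = Tuple[float, str, str]  # (unix timestamp, door name, "open" or "closed")


def current_state(events: Iterable[Event]) -> Dict[str, str]:
    """Return each door's state according to its most recent change."""
    latest: Dict[str, Tuple[float, str]] = {}
    for timestamp, door, state in events:
        if door not in latest or timestamp >= latest[door][0]:
            latest[door] = (timestamp, state)
    return {door: state for door, (_, state) in latest.items()}


def state_json(events: Iterable[Event]) -> str:
    """JSON body that a web front end or menulet could poll."""
    return json.dumps(current_state(events), sort_keys=True)
```

Comparing timestamps, rather than trusting arrival order, keeps a late-delivered webhook from overwriting a newer state.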

Crossed Lamps

Last weekend we bought two Rodd lamps at Ikea for the guest room, and it struck me how amused I'd be if each one switched the other. Six hours and a few new parts later, and it came out pretty well:

The remote action is especially jarring because the switches are right next to the bulbs they would normally control:

Amazon S3 as Append Only Datastore

As a hack, when I need an append-only datastore with no authentication or validation, I use Amazon S3. S3 is usually a read-only service from the unauthenticated web client's point of view, but if you enable access logging to a bucket you get full-query-parameter URLs recorded in a text file for GETs that can come from a form's action or via XHR.

There aren't a lot of internet-safe append-only datastores out there. All my favorite NoSQL solutions divide permissions into read and/or write, where write includes delete. SQL databases let you grant an account insert without update or delete, but none of them suggest listening on a port that's open to the world.

This is a bummer because there are plenty of use cases when you want unauthenticated client-side code to add entries to a datastore, but not read or modify them: analytics gathering, polls, guest books, etc. Instead you end up with a bit of server side code that does little more than relay the insert to the datastore using over-privileged authentication credentials that you couldn't put in the client.

To play with this, first, create a file named vote.json in a bucket with contents like {"recorded": true}, make it world readable, set Cache-Control to max-age=0,no-cache and Content-Type to application/json. Now when a browser does a GET to that file's https URL, which looks like a real API endpoint, there's a record in the bucket's log that looks something like:

```
aaceee29e646cc912a0c2052aaceee29e646cc912a0c2052aaceee29e646cc91 bucketname [31/Jan/2013:18:37:13 +0000] 96.126.104.189 - 289335FAF3AD11B1 REST.GET.OBJECT vote.json "GET /bucketname/vote.json?arg=val&arg2=val2 HTTP/1.1" 200 - 12 12 9 8 "-" "lwp-request/6.03 libwww-perl/6.03" -
```

The full format is described by Amazon, but with client IP and user agent you have enough data for basic ballot box stuffing detection, and you can parse and tally the query arguments with two lines of your favorite scripting language.
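A slightly more careful version of that tally, as a sketch: it assumes the field order shown in the sample line above, that votes arrive as query arguments on GETs of `vote.json`, and it ignores any fields after the user agent. It also counts requests per (IP, user agent) pair as raw material for stuffing detection.

```python
import re
from collections import Counter
from typing import Iterable, List, Optional, Tuple
from urllib.parse import parse_qsl, urlsplit

# Leading fields of an S3 server access log line: bucket owner, bucket, [time],
# remote IP, requester, request ID, operation, key, "request line".
LOG_RE = re.compile(
    r'^\S+ (?P<bucket>\S+) \[(?P<time>[^\]]+)\] (?P<ip>\S+) \S+ \S+ '
    r'(?P<operation>\S+) (?P<key>\S+) "(?P<request>[^"]*)"'
)


def parse_vote(line: str, key: str = "vote.json") -> Optional[Tuple[str, str, List[Tuple[str, str]]]]:
    """Return (ip, user_agent, query_args) for a GET of key, else None."""
    match = LOG_RE.match(line)
    if not match or match["operation"] != "REST.GET.OBJECT" or match["key"] != key:
        return None
    parts = match["request"].split(" ")
    if len(parts) < 2:
        return None
    quoted = re.findall(r'"([^"]*)"', line)  # request line, referrer, user agent, ...
    agent = quoted[2] if len(quoted) > 2 else "-"
    return match["ip"], agent, parse_qsl(urlsplit(parts[1]).query)


def tally(lines: Iterable[str]) -> Tuple[Counter, Counter]:
    """Count (arg, value) pairs, plus requests per (ip, user agent) for stuffing checks."""
    votes: Counter = Counter()
    voters: Counter = Counter()
    for line in lines:
        parsed = parse_vote(line)
        if parsed is None:
            continue
        ip, agent, args = parsed
        votes.update(args)
        voters[(ip, agent)] += 1
    return votes, voters
```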

This scheme is especially great for analytics gathering because one never has to worry about full log disks, load balancers, backed-up queues, or unresponsive data collection servers. When you're ready to process the data it's already on S3, near EC2, AWS Data Pipeline, or Elastic MapReduce. Plus, S3 has better uptime than anything else Amazon offers, so even if your app is down you're probably recording the failed usage attempts.

Creating Burn Down Charts for GitHub Repositories Using Google Apps Script

At DramaFever I got folks to buy into burn-down charts as the daily display for our weekly sprints, with the rotating release person responsible for updating a Google spreadsheet with each day's end-of-day open and closed issue counts.

It works fine, and it's only a small time burden, but if one forgets there's no good way to get the previous day's counts. Naturally I looked to automation, and GitHub has an excellent API. My usual take on something like this would be to have cron trigger a script that:

- polls the GitHub API

- extracts the counts

- writes them to some data store

- builds today's chart from the historical data in the datastore

That could be easily done in bash, Python, or Perl, but it's the sort of thing that's inevitably brittle. It's too ad hoc to run on a prod server, so it'll run from a dev shell box, which will be down, or disk full, or rebuilt w/o cron migration or any of the million other ailments that befall non-prod servers.

Looking for something different I tried Google Apps Script for the first time, and it came out very well.
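The Apps Script version isn't shown here (Apps Script is JavaScript). As an illustration in Python: because GitHub's issue objects carry `created_at` and `closed_at` timestamps, past end-of-day counts can be rebuilt after the fact from a single listing of all issues, open and closed, which covers the forgotten-day problem. This sketch assumes UTC days and ignores issues that were closed and later reopened.

```python
from datetime import date, datetime
from typing import Dict, Iterable, List, Optional, Tuple


def _to_date(timestamp: Optional[str]) -> Optional[date]:
    """Parse a GitHub timestamp like '2013-01-31T18:37:13Z' to a UTC date."""
    if not timestamp:
        return None
    return datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%SZ").date()


def daily_counts(issues: Iterable[Dict[str, Optional[str]]], days: Iterable[date]) -> List[Tuple[date, int, int]]:
    """End-of-day (day, open, closed) counts reconstructed from issue timestamps."""
    spans = [(_to_date(i["created_at"]), _to_date(i.get("closed_at"))) for i in issues]
    rows = []
    for day in days:
        # Closing dates of every issue that existed by the end of this day.
        existing = [closed for created, closed in spans if created <= day]
        closed = sum(1 for c in existing if c is not None and c <= day)
        rows.append((day, len(existing) - closed, closed))
    return rows
```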

GitHub Jenkins Deploy Keys Config

GitHub doesn't let you use the same deploy key for multiple repositories within a single organization, so you have to either (a) manage multiple keys, (b) create a non-human user (boo!), or (c) use their not-yet-ready-for-primetime HTTP OAuth deploy access, which can't create read-only permissions.

In the past, to manage the multiple keys I've either (a) used ssh-agent or (b) specified which private key to use for each request using -i on the command line, but neither of those is convenient with Jenkins.

Today I finally thought about it a little harder and figured out I could use fake hostnames that map to the right keys within the .ssh/config file for the Jenkins user. To make it work I put stanzas like this in the config file:

```
Host repo_name.github.com
    Hostname github.com
    HostKeyAlias github.com
    IdentityFile ~jenkins/keys/repo_name_deploy
```

Then in the Jenkins GitHub Plugin config I set the repository URL as:

```
git@repo_name.github.com:ry4an/repo_name.git
```

There is no host repo_name.github.com and it wouldn't resolve in DNS, but that's okay: the .ssh/config tells ssh to actually connect to github.com, and we still get the right key.

Maybe this is obvious and everyone's doing it, but I found it the least-hassle way to maintain the accounts-for-people-only rule along with the separate-keys-for-separate-repos rule and without running the ssh-agent daemon for a non-login shell.
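With more than a handful of repositories, the stanzas are easy to generate. A small sketch, assuming the same `~jenkins/keys/<repo>_deploy` key-naming convention as above; the repo and owner names passed in are up to you:

```python
from typing import Iterable


def deploy_key_stanzas(repos: Iterable[str], key_dir: str = "~jenkins/keys") -> str:
    """One ssh_config stanza per repo, each with a fake host that selects that repo's key."""
    stanzas = []
    for repo in repos:
        stanzas.append(
            f"Host {repo}.github.com\n"
            f"    Hostname github.com\n"
            f"    HostKeyAlias github.com\n"
            f"    IdentityFile {key_dir}/{repo}_deploy\n"
        )
    return "\n".join(stanzas)


def clone_url(owner: str, repo: str) -> str:
    """Repository URL that routes through the repo's fake host alias."""
    return f"git@{repo}.github.com:{owner}/{repo}.git"
```

The output of `deploy_key_stanzas` can be appended to the Jenkins user's `.ssh/config`, and `clone_url` gives the matching repository URL for each job.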

spdyproxy on Ubuntu 12.04 LTS

I'm often on unencrypted wireless networks, and I don't always trust everyone on the encrypted ones, so I routinely run a SOCKS proxy to tunnel my web traffic through an encrypted SSH tunnel. This works great, but I have to start the SSH tunnel before I start browsing (that's okay, IRC before reader), and when I sleep the laptop the SSH tunnel dies and needs a restart before I can browse again. In the past I've used autossh to automate that reconnect, but it still requires more attention than it deserves.

So I was excited when I saw Ilya Grigorik's writeup on spdyproxy. With SPDY, multiple independent bidirectional connections are multiplexed through a single TCP connection, in the same way some of us tried to do with web-mux in the late 90s (I've got a Java 1.1 implementation somewhere). SPDY connections can be encrypted, so when making a SPDY connection to an HTTP proxy you're getting an encrypted tunnel through which your HTTP connections to anywhere can be tunneled, and probably getting a TCP setup/teardown speed boost as well. Ilya's excellent node-spdyproxy handles the server side of that setup admirably, and the rest of this writeup covers getting it running (with production accouterments) on an Ubuntu 12.04(.1) LTS system.

With the below setup in place I can use a proxy.pac to make sure my browser never sends an unencrypted byte through whatever network I'm on -- DNS lookups excluded; you still need SOCKSv4a or SOCKSv5 to hide those.

Getting Chef Solo Working With the Database Cookbook and Vagrant

This is going to be one big jargon laden blob if you're not trying to do exactly what I was trying to do this week, but hopefully it turns up in a search for the next person.

I'm setting up a new development environment using Vagrant, and I'm using Chef Solo to provision the Ubuntu (12.04 Precise Pangolin) guest. I wanted MySQL server running and wanted to use the database cookbook from Opscode to pre-create a database.

My run list looked like:

```
recipe[mysql::server], recipe[webapp]
```

Where the webapp recipe's metadata.rb included:

```ruby
depends "database"
```

and the default recipe led off with:

```ruby
mysql_database 'master' do
  connection my_connection
  action :create
end
```

Which would blow up at execution time with the message "missing gem 'mysql'", which was really frustrating because the gem was already installed in the previous mysql::server recipe.

I learned about chef_gem as compared to gem_package from the Chef docs, and found another page that showed how to run actions at compile time instead of during Chef's later execution phase, but only when I did both, like this:

```ruby
# Install the OS packages the mysql gem needs, at compile time
%w{mysql-client libmysqlclient-dev make}.each do |pack|
  package pack do
    action :nothing
  end.run_action(:install)
end

# Install the mysql gem into Chef's own gem path, also at compile time
g = chef_gem "mysql" do
  action :nothing
end
g.run_action(:install)
```

was I able to get things working. Notice that I had to install the OS packages for the MySQL client, the MySQL development headers, and the venerable make: the mysql Ruby gem builds a native extension, and I was installing it early into Chef's gem path -- which with Vagrant's Chef Solo provisioner is entirely separate from any system gems.

Low Flow Shower Delay

When I start up the shower it's the wrong temperature and adjusting it to the right temperature takes longer in this apartment than it has in any home in which I've previously lived. I wanted to blame the problem on the low flow shower head, but I'm having a hard time doing it. My thinking was that the time delay from when I adjust the shower to when I actually feel the change is unusually high due to the shower head's reduced flow rate.

In setting out to measure that delay I first found the shower's flow rate. I measured it three times with a bucket and a stopwatch and found that the shower filled 1.5 qt in 12 s, or 1.875 gpm, or 0.118 liters per second. A low flow shower head, according to the US EPA's WaterSense program, is under 2.0 gpm, so that's right on target.

That told me how quickly the "wrong temperature" water leaves the pipe; next, to see how much of it there is. From the hot-cold mixer to the shower head there's 65 inches of nominal 1/2" pipe, which has an inner diameter of 0.545 inches. The volume of a cylinder (I'm ignoring the curve of the shower arm) is just pi times radius squared times length:

$$V = \pi r^2 \ell$$

Converting to normal units gives a 1.3843 centimeter diameter and a 165.1 centimeter length, which yields 248 cm³, or 0.248 liters, of wrong-temperature water to wait through until I get to sample the new mix.

With a flow rate of 0.118 liters per second and 0.248 liters of unpleasant water I should be feeling the new mix in 2.1 seconds. I recognize that time drags when you're being scalded, but it still feels like longer than that.
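Written out with the measurements above (inner radius r = 1.3843/2 ≈ 0.692 cm, pipe length ℓ = 165.1 cm), the flow conversion, pipe volume, and delay work out as:

$$Q = \frac{1.5\ \text{qt}}{12\ \text{s}} = 1.875\ \text{gal/min} = \frac{1.875 \times 3.785\ \text{L}}{60\ \text{s}} \approx 0.118\ \text{L/s}$$

$$V = \pi r^2 \ell = \pi\,(0.692\ \text{cm})^2\,(165.1\ \text{cm}) \approx 248\ \text{cm}^3 = 0.248\ \text{L}$$

$$t = \frac{V}{Q} = \frac{0.248\ \text{L}}{0.118\ \text{L/s}} \approx 2.1\ \text{s}$$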

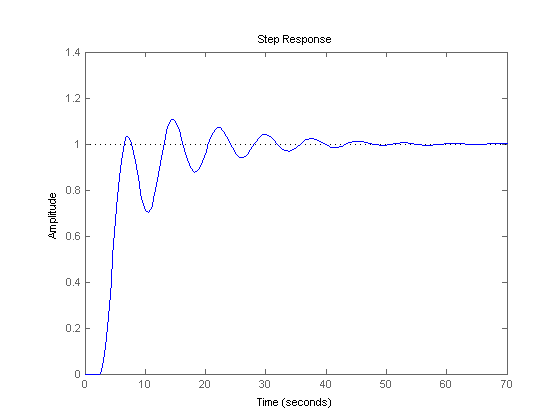

I've done some casual reading about time delays in linear feedback control systems, and the oscillating convergence they show certainly aligns with the too-hot then too-cold feel of getting this shower right. This graph is swiped from MathWorks and shows a closed loop step response with a 2.5 second delay:

That shows about 40 seconds to finally home in on the right temperature, and it doesn't include a failure-prone, over-correcting human. I'm still not convinced the delay is the entirety of the problem, but it does seem to be a contributing factor. Some other factors that may affect perception of how slow this shower is to get right are:

- non-linearity in the mixer

- water in the shower head itself

- water already "in flight" having left the head but not yet having hit me
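To see why a couple of seconds of dead time matters, here is a deliberately crude stand-in for the MathWorks model, sketched in Python: a bather who keeps turning the mixer at a rate proportional to how wrong the water feels (integral-style correction), while the water they feel is whatever the mixer produced `delay_s` seconds earlier. The gain, time step, and normalized temperatures (start at 0, goal at 1) are made-up assumptions.

```python
from typing import List


def simulate(delay_s: float, gain: float = 0.5, dt: float = 0.1, duration_s: float = 60.0) -> List[float]:
    """Return the mixer setting over time under delayed integral-style correction.

    The mixer starts at 0 (wrong) and the target is 1. At each step the bather
    feels the mixer setting from delay_s seconds earlier and nudges the mixer
    by gain * error * dt.
    """
    lag = round(delay_s / dt)  # the delay expressed in time steps
    history = [0.0]  # mixer setting at each step
    for _ in range(round(duration_s / dt)):
        # Until the first adjusted water arrives, the bather still feels the start temperature.
        felt = history[-1 - lag] if len(history) > lag else 0.0
        history.append(history[-1] + gain * (1.0 - felt) * dt)
    return history
```

With the measured 2.1 second delay, `max(simulate(2.1))` goes above 1, meaning the water overshoots before swinging back and settling, while `simulate(0.0)` creeps up to the target without ever passing it.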

Mercifully, the water temperature is consistent -- once you get it dialed in correctly it stays correct, even through a long shower.

I guess the next step is to get out a thermometer, both to characterize the linearity of the mixing control and to measure the rate of change in the temperature as it relates to the magnitude of the adjustment.

Update: I got a ChemE friend to weigh in with some help.

OS X Linux Clipboard Sharing

My primary home machine is a Linux desktop, and my primary work machine is an OS X laptop. I do most of my work on the Linux box, ssh-ed into the OS X machine -- I recognize that's the reverse of usual setups, but I love the awesome window manager and the copy-on-select X Window selection scheme.

My frustration is in having separate copy and paste buffers across the two systems. If I select something in a work email, I often want to paste it into the Linux machine. Similarly if I copy an error from a Linux console I need to paste it into a work email.

There are a lot of ways to unify clipboards across machines, but they're all either full-scale mouse and keyboard sharing, single-platform, or GUI tools.

Finding the excellent xsel tool, I cooked up some command lines that would let me shuttle strings between the Linux selection buffer and the OS X system via ssh.

I put them into the Lua script that is the shortcut configuration for awesome, and now I can move selections back and forth. I also added some shortcuts for moving text between the Linux selection (copy-on-select) and clipboard (copy-on-keypress).

```lua
-- Used to shuttle selection to/from mac clipboard
select_to_mac = "bash -c '/usr/bin/xsel --output | ssh mac pbcopy'"
mac_to_select = "bash -c 'ssh mac pbpaste | /usr/bin/xsel --input'"

-- Used to shuttle between selection and clipboard
select_to_clip = "bash -c '/usr/bin/xsel --output | /usr/bin/xsel --input --clipboard'"
clip_to_select = "bash -c '/usr/bin/xsel --output --clipboard | /usr/bin/xsel --input'"

-- Key bindings (these entries go inside the list that builds globalkeys)
awful.key({ modkey,         }, "c", function () awful.util.spawn(mac_to_select) end),
awful.key({ modkey,         }, "v", function () awful.util.spawn(select_to_mac) end),
awful.key({ modkey, "Shift" }, "c", function () awful.util.spawn(clip_to_select) end),
awful.key({ modkey, "Shift" }, "v", function () awful.util.spawn(select_to_clip) end),
```

NFC PayPass Rick Roll

NFC tags are tiny wireless storage devices with very thin antennas, attached to poker-chip-sized stickers. They're sort of like RFID tags, but they only have a 1 inch range, come in various capacities, and can be easily rewritten. If the next iPhone adds an NFC reader I think they'll be huge. As it is they're already pretty fun and only a buck each even when bought in small quantities.

Marketers haven't figured it out yet, but no one wants to scan QR barcodes. The hassle with QR codes is that you have to fire up a special barcode reader application and then carefully focus on the barcode with your phone's camera. NFC tags have neither of those problems. If your phone is awake it's "looking for" NFC tags, and there's no aiming involved -- just tap your phone on the tag.

An NFC tag stores a small amount of data that's made available to your phone when tapped. It can't make your phone do anything your phone doesn't already do, and your phone shouldn't be willing to do anything destructive or irreversible (send money) without asking for your confirmation.
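For the curious, a tag like this typically holds one NDEF record. As an illustration, here is a minimal sketch of encoding a URL as an NFC Forum URI record in the short-record form, using only a few of the spec's prefix codes; the URL passed in is up to you, and writing the bytes onto a physical tag (via a writer app or reader hardware) isn't shown.

```python
# A few of the URI identifier codes from the NFC Forum URI record type
# definition; the record stores the code instead of the spelled-out prefix.
URI_PREFIXES = [
    (0x02, "https://www."),
    (0x01, "http://www."),
    (0x04, "https://"),
    (0x03, "http://"),
]


def ndef_uri_record(url: str) -> bytes:
    """Encode url as a single short NDEF record of well-known type 'U'."""
    code, rest = 0x00, url  # 0x00 means no prefix abbreviation
    for prefix_code, prefix in URI_PREFIXES:  # longer prefixes are checked first
        if url.startswith(prefix):
            code, rest = prefix_code, url[len(prefix):]
            break
    payload = bytes([code]) + rest.encode("utf-8")
    if len(payload) > 255:
        raise ValueError("short-record form only holds payloads up to 255 bytes")
    # Header 0xD1 = MB (message begin) | ME (message end) | SR (short record) | TNF 0x01 (well-known).
    return bytes([0xD1, 0x01, len(payload)]) + b"U" + payload
```

Because any NFC-equipped phone already understands this record type, no special app is needed on the reading side.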

People are using NFC tags to turn on Bluetooth when their phone is placed in a stickered cup-holder, to turn off their email notification checking when their phone is placed on their nightstand, and to bring up their calculator when their phone is placed on their desk. Most anything your phone can do can be triggered by an NFC tag.

NFC tags are already in use for transit passes, access keys, and payment systems. I can go to any of a number of businesses near my home and make a purchase by tapping my phone against the MasterCard PayPass logo on the card scanner. My phone will then ask for a PIN and ask me to confirm the purchase price, which is deducted from my Google Wallet or charged to my Citi MasterCard.

I'm still batting around ideas for a first NFC project, maybe a geocaching / scavenger-hunt-like trail of tags with clues, but meanwhile I made some fake MasterCard PayPass labels that are decidedly more fun:

Keep in mind that phone has absolutely no non-standard software or settings on it. Any NFC-reader equipped phone that touches that tag will be rick rolled. Now to get a few of those out on local merchants' existing credit card readers.

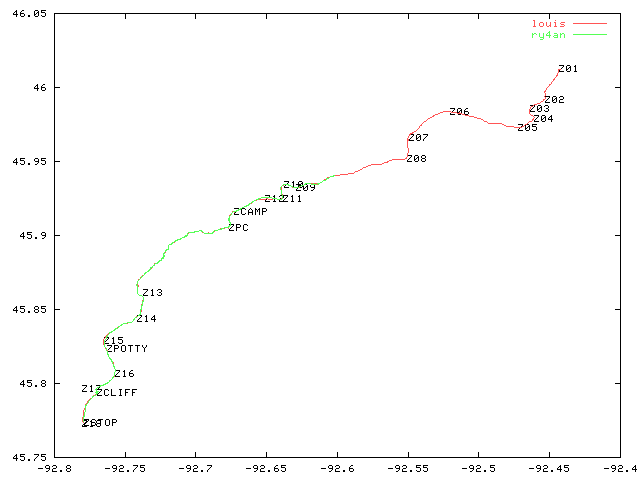

Mercurial Chart Extension

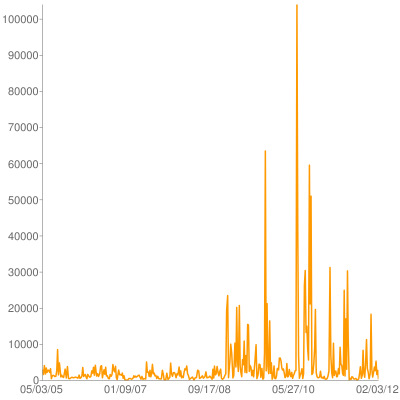

Back in 2008 I wrote an extension for Mercurial to render activity charts like this one:

Yesterday I finally got around to updating it for modern Mercurial builds, including 2.1. It's posted on bitbucket and has a page on the Mercurial wiki. It uses pygooglechart as a wrapper around the excellent Google image chart API.

I really like the Google image charts because the entire image is encapsulated as a URL, which means they work great with command line tools. A script can output a URL, my terminal can make it a link, and I can bring it up in a browser window w/o ever really using a GUI tool at all.

If I take any next step on this hg-chart-extension it will be to accept revsets for complex specifications of which changesets one wants graphed, but given that it took me two years to fix breakage that happened with version 1.4 that seems unlikely.

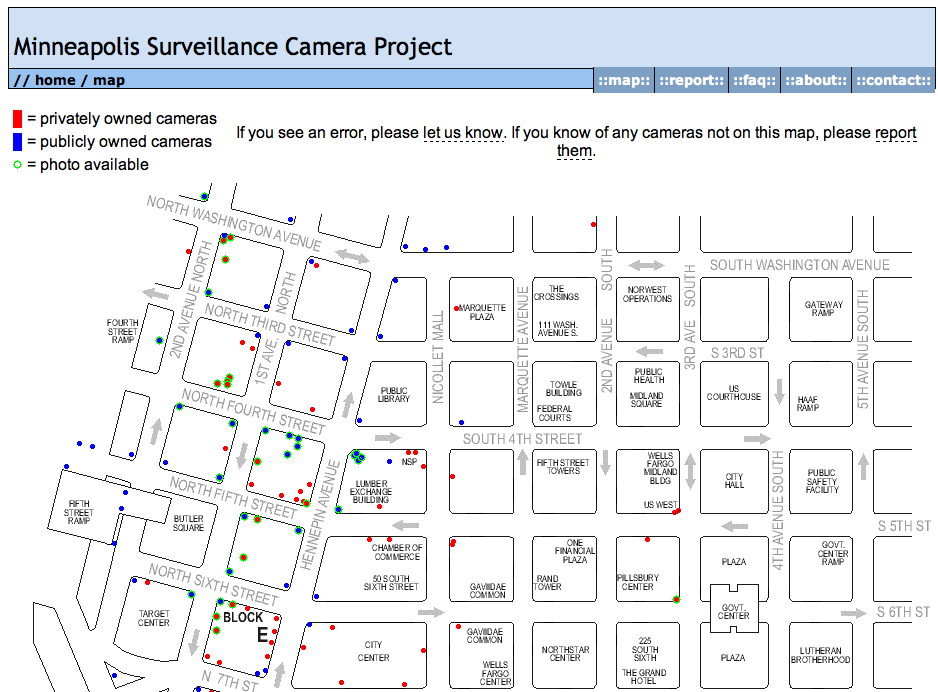

Minneapolis Surveillance Camera Project Shut Down

Now that I no longer live in Minneapolis it seems a fine time to shut down the Minneapolis Surveillance Camera Project I launched in 2003.

At peak it got mentioned in a few Strib articles, was written about in the Downtown Journal, and got a lot of hits from computers within city and county government. After my initial inventory walk most of the camera additions came in via the website from strangers. More of the reports had photos of the cameras once everyone had a camera in their phone.

The whole idea of trying to inventory public and private cameras in public spaces seems silly now. One might as well start the Minneapolis Atom Inventory project -- they're just as plentiful and hard to spot.

If anyone would like the mpls-watched.org domain for any reason let me know. It expires in June and I'm happy to transfer it to someone with a use for it. The content still exists within archive.org.

Posthumous Key Revocation

I've just emptied out my safe deposit box for the move, and thought I'd re-post this:

If you hear I've died, someone who knows their way around gpg should ask Kate for the CD pictured below. It'll be in a safe deposit box that's in my name, and she'll have access after my death. There's a key revocation certificate with reason 'death' on the CD, and a printed ASCII-armored version too, since the odds of us being able to read CDs in a few decades are approximately nil.

Miscellaneous Open Source Contributions

I'll take Mercurial over git any day for all the reasons obvious to anyone who's really used both of them, but geeyah, GitHub sure makes contributing to projects easy. At work a ten minute MongoDB upgrade downtime turned into two hours, and when we finally figured out which deprecated option was causing the daemon launch to abort, rather than grouse about it on Twitter (okay, I did that too) I was able to submit a one-line patch, which got merged in, without even cloning the repository.

On the more substantial side, I fixed some crash bugs in dircproxy. It had been running rock solid for me for a few years, but a recent libc upgrade that added some memory checking had it crashing a few times a day. With the help of Nick Wormley I was able to fix some (rather egregious) memory gaffes. I guess this is the oft-trumpeted advantage of open source software in the first place -- I had software I counted on that stopped working, and I was able to fix it. Really though it was just fun to fire up gdb for the first time in ages.

Finally, I was able to take some hours at work and contribute a cookbook for chef to add the New Relic monitoring agent to our many EC2 instances. It may never see a single download, but it's nice to know that if someone wants to use chef to add their systems to the New Relic monitoring display they don't have to start from scratch.

I've been living in a largely open source computing environment for fifteen years, but the barrier to entry as a minor contributor has never been so low.

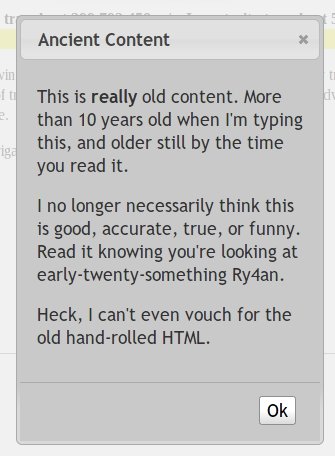

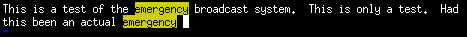

Ancient Content Warnings

I just rebuilt the ry4an.org server, and as part of the migration I realized I still had a lot of very old, almost embarrassing content online. I took the broken or not-conceivably-interesting stuff offline and am serving up 410 GONE responses for it.

There exists, however, a broad swath of stuff that's not yet entirely useless, but is more than ten years old and not stuff I would likely post today. For all of these pages I've left the content up, but you first have to click through a modal dialog warning you you're looking at very old stuff I don't necessarily endorse. That pop up looks like this:

An example can be found here: https://ry4an.org/rrr/ . (Though, if anyone still wants to race from point to point in the twin cities during the worst of rush hour I still think it's an awesome idea.)

Being the sort of person that I am, I automated the process of adding those warnings to anything that hasn't been modified in at least ten years. So, if you got an ancient content warning when viewing this page: Hello 2021!
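The post doesn't show how the stale pages were found. A minimal sketch of the selection step, assuming a static tree of `.html`/`.htm` files and treating the file's modification time as "last modified" (the function name `stale_pages` is hypothetical):

```python
import os
import time

# Roughly ten years in seconds (365.25-day years).
TEN_YEARS = 10 * 365.25 * 24 * 60 * 60


def stale_pages(root, max_age=TEN_YEARS, suffixes=(".html", ".htm"), now=None):
    """Yield paths under root whose files were last modified more than max_age seconds ago."""
    now = time.time() if now is None else now
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in sorted(filenames):
            if not filename.endswith(suffixes):
                continue  # only web pages get the warning
            path = os.path.join(dirpath, filename)
            if now - os.path.getmtime(path) > max_age:
                yield path
```

Each yielded path would then get the warning markup injected; mtime is a convenient proxy, but it resets whenever a file is copied without preserving timestamps, so a version-control date may be the safer source.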

If you follow a link or bookmark to ry4an.org and you get a 404 Not Found, let me know. Everything should either still be there or should give a 410 GONE so you know it's not there on purpose.

Asynchronous Python Logging

The Python logging module has some nice built-in handlers that do network IO, but I couldn't square having HTTP POSTs and SMTP sends in web response threads. I didn't find an asynchronous logging wrapper, so I wrote a decorator of sorts using the really nifty monkey patching available in Python:

    import queue
    import sys
    import threading


    def patchAsyncEmit(handler):
        """Make handler.emit non-blocking by handing records to a daemon thread."""
        base_emit = handler.emit
        records = queue.Queue()

        def loop():
            while True:
                record = records.get(True)  # blocks until a record arrives
                try:
                    base_emit(record)
                except Exception:  # not much you can do when your logger is broken
                    print(sys.exc_info())

        thread = threading.Thread(target=loop)
        thread.daemon = True
        thread.start()

        def asyncEmit(record):
            records.put(record)

        handler.emit = asyncEmit
        return handler

In a more traditional OO language I'd do that with extension or a dynamic proxy, and in Scala I'd do it as a trait, but this saved me having to write delegates for all the other methods in logging.Handler.

Did I miss this in the standard logging stuff, does everyone roll their own, or is everyone else okay doing remote logging in a web thread?
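For anyone landing here later: Python 3.2 added `logging.handlers.QueueHandler` and `logging.handlers.QueueListener`, which split logging the same way the patch above does (the web thread only enqueues; a background thread does the slow emit). A minimal sketch, where `ThreadRecordingHandler` is a hypothetical stand-in for a slow `SMTPHandler` or `HTTPHandler`:

```python
import logging
import logging.handlers
import queue
import threading


class ThreadRecordingHandler(logging.Handler):
    """Hypothetical stand-in for a slow network handler; notes which thread emitted."""

    def __init__(self):
        super().__init__()
        self.seen = []

    def emit(self, record):
        self.seen.append((threading.current_thread().name, record.getMessage()))


def make_async_logger(name, slow_handler):
    """Attach a QueueHandler to the named logger and drain it into slow_handler."""
    records = queue.Queue(-1)  # unbounded, so logging calls never block
    logger = logging.getLogger(name)
    logger.addHandler(logging.handlers.QueueHandler(records))
    listener = logging.handlers.QueueListener(records, slow_handler)
    listener.start()  # slow_handler.emit now runs on the listener's thread
    return logger, listener


slow = ThreadRecordingHandler()
logger, listener = make_async_logger("webapp", slow)
logger.warning("disk %s is full", "/dev/sda1")
listener.stop()  # flushes anything still queued, then joins the thread
```

Unlike monkey patching `emit`, this keeps the real handler untouched, and `listener.stop()` gives a clean way to flush pending records at shutdown.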

Graduation Form Letter

We just passed through another graduation season, and for the second year running I was able to get by with the same stack of form letters:

Dear _______________________________,

My ( Congratulations | Condolences | ___________________ ) on your recent ( Graduation | Eagle Rank | Loss | ___________________ ). It is with ( Great Joy | a Heavy Heart ) that I received the news. I'm sure it took a lot of ( Hard Work | Cigarettes ) to make it happen. I'm sure you'll have a ( great time | good cry ) at the ( open house | wake ) and ( regret | am glad ) that I ( can | cannot ) attend.

As you move on to your next phase in life please remember:

[ ] the importance of hard work

[ ] the risks of smoking

[ ] there are other fish in the sea

[ ] don't have your mom send out your graduation invites -- you're an adult now

[ ] ________________________________________

and the value of personal correspondence.

Sincerely,

your ( Cousin | Scoutmaster | Parolee | _____________________ ),

Ry4an Brase

Enclosures (1): ( Check | Card | Gift | Best Wishes )

It's available as a Google Doc.

Scholars Walk Time Traveller

The University of Minnesota has a Scholar's Walk which celebrates great persons affiliated with the U and the awards they've won. One display labeled "Historical Giants" remains without any names. Since the U can't reasonably be anticipating any new history, I imagine that four years after installation there's still a committee somewhere arguing about which department gets more names. Not content to wait for the committee, I decided to add a historical giant of my own -- a time traveller.

The displays are stone boxes with two panes of glass. The outermost pane of glass extends a quarter inch on steel pegs and shows the University branding and the category information. The innermost pane is recessed three inches and contains the names of the honorees. I figured that the outermost glass would provide the necessary gloss, and that any way I could get names behind it and at the right depth would look okay.

I had vinyl lettering made with the wording I wanted and applied it to some laboriously cut thin Lexan. In tests I could bend the Lexan ninety degrees with just a four-inch radius. That flexibility was enough that I could slide the insert in through the bottom of the display (the top was sealed against weather).

I showed up early one morning, slipped the insert into place between the two pieces of glass, and was very pleased with the result. The white lettering looked sufficiently etched once behind the shiny outer glass, the fonts and sizes matched nearby displays, and the borders of the Lexan insert were nearly invisible. The text is easier to read in the big photo links below, but it lists a physicist with a disjoint lifespan lauded "for her uniquely important contributions to the understanding of time travel".

Sadly, the insert was removed a few months later, before someone who can actually take a decent picture got to it. Removing it probably meant disassembling the box to some extent, as the very springy plastic insert wouldn't adopt the bend required to make it around the corner without significant coercion not applicable from outside the box.

Here are the bigger pics:

Homemade Tonic

I just made my third batch of tonic water from Mark Sexauer's Recipe:

- 4 cups water

- 4 cups sugar

- 1/4 cup cinchona bark

- 1/4 cup citric acid

- Zest and juice of 1 lime

- Zest and juice of 1 lemon

- Zest and juice of 1 orange

- 1 teaspoon coriander seeds

- 1 teaspoon dried bitter orange peel

- 10 dashes bitters

- 1 hand crushed juniper berry (I used two)

The flavor is excellent, but the process is terrible. Specifically, filtering the cinchona bark from the mixture after extracting the quinine (actually totaquine) from it is nearly impossible. It's so finely ground it clogs any filter, be it paper or the mesh on a French press coffeemaker, almost immediately. I've tried letting it settle and pouring off the liquid, forcing the liquid through with back pressure, and letting it drip all night -- none work well. A friend using the same recipe built a homemade vacuum extractor, but I've not yet gone that far.

This time I used multiple coffee filters and gravity, changing out the filter every few tablespoons, whenever it plugged. I lost about half the liquid volume in the form of discarded, soggy filters. On previous batches I didn't take the liquid loss into account when adding sugar, yielding tonic water that was too sweet (my primary complaint with store-bought tonic), but this time I halved the sugar as well and got a nice tart result. The fresh citrus and two juniper berries I use make it a perfect match for a nice gin. I'm able to carbonate the mixture with my own carbonation rig.

For the next batch I'm going to see if I can buy some quinine from the gray market online pharmacies. A single 300mg tablet, used to fight malaria or alleviate leg cramps, will make three strong liters and I'll be able to skip the filtering process entirely. The other benefit to using quinine instead of totaquine is aesthetic. The cinchona bark leaves my homemade tonic syrup brown and the water an unpleasant yellow color as you can see below.

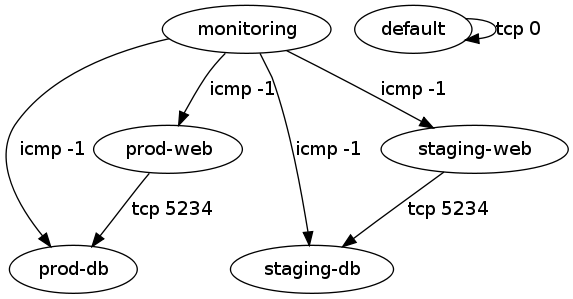

A Few Quick EC2 Security Group Migration Tools

Like half the internet, I'm working on duplicating a setup from one Amazon EC2 region to another. I couldn't find a quick way to do that if my stuff wasn't already described in CloudFormation templates, so I put together a script that queries the security groups using ec2-describe-group and produces a shell script that re-creates them in a different region.

If all your EC2 command line tools and environment variables are set, you can mirror us-east-1 to us-west-1 using:

    ec2-describe-group | ./create-firewall-script.pl > create-firewall.sh
    ./create-firewall.sh

With non-demo security group data I ran into some group-to-group access grants whose purpose wasn't immediately obvious, so I put together a second script using graphviz to show the ALLOWs. A directed edge can be read as "can access".

That script can also be invoked as:

ec2-describe-group | ./visualize-security-groups.pl > groups.png

The labels on the edges can be made more detailed, but having each of tcp, udp, and icmp shown started to get excessive.
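The visualization script itself lives in the tarball rather than the post. The graph half of the idea is small enough to sketch in Python, assuming the group-to-group grants have already been parsed out of `ec2-describe-group` into hypothetical `(source, target, protocol)` tuples; the output is Graphviz DOT text, with protocol labels optional for the reason given above:

```python
def grants_to_dot(grants, show_protocols=False):
    """Render (source_group, target_group, protocol) grants as Graphviz DOT text.

    Each edge "source" -> "target" reads as "source can access target". Multiple
    protocols between the same pair of groups collapse into a single edge.
    """
    edges = {}  # keyed by (source, target) in first-seen order -> set of protocols
    for source, target, protocol in grants:
        edges.setdefault((source, target), set()).add(protocol)

    lines = ["digraph security_groups {"]
    for (source, target), protocols in edges.items():
        label = ' [label="%s"]' % ",".join(sorted(protocols)) if show_protocols else ""
        lines.append('  "%s" -> "%s"%s;' % (source, target, label))
    lines.append("}")
    return "\n".join(lines)
```

Printing the result and piping it through `dot -Tpng -o groups.png` produces the image; collapsing protocols into one edge keeps busy groups readable.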

Both scripts and sample input and output are in the provided tarball.

reStructuredText Resume

I've had a resume in active maintenance since the mid 90s, and it's gone through many iterations. I started with a Word document (I didn't know any better). In the late 90s I moved to parallel Word, text, and HTML versions, all maintained separately, which drifted out of sync horribly. In 2010 I redid it in Google Docs using a template I found whose HTML hinted at a previous life in Word for OS X. That template had all sorts of class and style stuff in it that Google Docs couldn't actually edit/create, so I was back to hand-editing HTML and then using Google Docs to create a PDF version. I still had to keep the text version current separately, but at least I'd decided I didn't want any job that wanted a Word version.

When I decided to finally add my current job to my resume, even months after starting, I went for another overhaul. The goal was to have a single input file whose output provided HTML, text, and PDF representations. As I saw it that made the options: LaTeX, reStructuredText, or HTML.

I started down the road with LaTeX, and found some great templates, articles, and prior examples, but it felt like I was fighting with the tool to get acceptable output, and nothing was coming together on the plain text renderer front.

Next I turned to reStructuredText, and found it yielded a workable system. I started with Guillaume ChéreAu's blog post and template and used the regular docutils tool rst2html to generate the HTML versions. The normal route for turning reStructuredText into PDF using docutils passes through LaTeX, but I didn't want to go that route, so I used rst2pdf, which gets there directly. I counted the reStructuredText version as close enough to text for that format.

Since now I was dealing entirely with a source file that compiled to generated outputs, it only made sense to use a Makefile and keep everything in a Mercurial repository. That gives me the ability to easily track changes and to merge across multiple versions (different objectives) should the need arise. With the Makefile and Mercurial in place I was able to add an automated version string/link to the resume so I can tell from a printout which version someone is looking at. Since I've always kept the HTML version in a source control repository, it's possible to compare revisions back to 2001, which get pretty silly.

I'm also proud to say that the URL for my resume hasn't changed since 1996, so every printed version ever made includes a link to the most current one. Here are links to each of those formats: HTML, PDF, text, and repository, where the text version is the one from which the others are created.

Automatic SSH Tunnel Home As Securely As I Can

After watching a video from Defcon 18 and seeing a tweet from Steve Losh, I decided to finally set up an automatic SSH tunnel from my home server to my traveling machines. The idea is that if I leave the machine somewhere or it's taken, I can get in remotely and wipe it or take photos with the camera. There are plenty of commercial software packages that will do something like this for Windows, Mac, and Linux, and there's the highly regarded, open source Prey, but they all either rely on a third-party service or do a lot more than a simple back-tunnel.

I was able to cobble together an automatic back-connect from laptop to server using standard tools and a lot of careful configuration. Here's my write up, mostly so I can do it again the next time I get a new laptop.

BoingBoing Posts in Rogue

Previously I mentioned I was importing the full corpus of BoingBoing posts into MongoDB, which went off without a hitch. The import was just to provide a decent dataset for trying out Rogue, the Mongo query DSL from the folks at Foursquare. Last weekend I was in New York for the Northeast Scala Symposium and the Foursquare Hackathon, so I took the opportunity to finish up the query part while I had their developers around to answer questions.

Loading BoingBoing into MongoDB with Scala

I want to play around with Rogue by the Foursquare folks, but first I needed a decent-sized collection of items in MongoDB. I recalled that BoingBoing had just released all their posts in a single file, so I downloaded that and put together a little Scala to convert from XML to JSON. The built-in XML support in Scala and the excellent lift-json DSL turned the whole thing into no work at all:
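The Scala itself isn't reproduced here. As a rough Python equivalent of the same XML-to-JSON step, here's a sketch assuming a hypothetical dump layout of `<post>` elements with simple child elements (the real file's element names aren't given in the post). It streams the file with `iterparse` rather than loading it whole, and writes one JSON document per line, the layout `mongoimport` reads by default:

```python
import json
import xml.etree.ElementTree as ET


def posts_to_json_lines(xml_source, out, post_tag="post"):
    """Stream post elements from xml_source, writing one JSON object per line to out.

    post_tag and the child element names are hypothetical; adjust to the real dump.
    Returns the number of posts written.
    """
    count = 0
    for _event, elem in ET.iterparse(xml_source, events=("end",)):
        if elem.tag != post_tag:
            continue
        # Flatten simple children like <title>...</title> into {"title": "..."}.
        doc = {child.tag: (child.text or "").strip() for child in elem}
        out.write(json.dumps(doc) + "\n")
        elem.clear()  # release the finished post; the full dump is large
        count += 1
    return count
```

Usage would be along the lines of opening the dump in binary mode, writing to `posts.json`, and then running `mongoimport --db boingboing --collection posts --file posts.json` (database and collection names hypothetical).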

Syntax Highlighting and Formulas for Blohg

I'm thus far thrilled with blohg as a blogging platform. I've got a large post I'm finishing up now with quite a few snippets of source code in two different programming languages. I was hoping to use the excellent SyntaxHighlighter JavaScript library to prettify those snippets, and was surprised to find that docutils reStructuredText doesn't yet do that (though some other implementations do).

Fortunately, adding new rendering directives to reStructuredText is incredibly easy. I was able to add support for a .. code mode with just this little bit of Python:
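The snippet that originally followed isn't preserved here. A minimal sketch of the idea, assuming docutils' standard `Directive` API and SyntaxHighlighter's `<pre class="brush: LANG">` convention: the directive emits raw HTML for the highlighter script to find. The class name `SyntaxHighlighterCode` and helper `render_body` are hypothetical, and registering under `code` overrides the built-in `code` directive that newer docutils releases ship:

```python
import html

from docutils import nodes
from docutils.core import publish_parts
from docutils.parsers.rst import Directive, directives


class SyntaxHighlighterCode(Directive):
    """Render ``.. code:: <brush>`` as a <pre> block for SyntaxHighlighter."""

    has_content = True
    optional_arguments = 1  # the brush name, e.g. "python" or "scala"

    def run(self):
        brush = self.arguments[0] if self.arguments else "plain"
        source = html.escape("\n".join(self.content))
        markup = '<pre class="brush: %s">%s</pre>' % (html.escape(brush), source)
        # A raw node with format="html" is copied verbatim into HTML output.
        return [nodes.raw("", markup, format="html")]


directives.register_directive("code", SyntaxHighlighterCode)


def render_body(rst_text):
    """Render reStructuredText to an HTML body fragment using the directive above."""
    return publish_parts(rst_text, writer_name="html")["body"]
```

Escaping the content matters here: source code full of `<` and `&` would otherwise be eaten by the browser before SyntaxHighlighter ever sees it.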

Blacklisting Changesets in Mercurial

Distributed version control systems have revolutionized how software teams work by making merges no longer scary. Developers can work on a feature in relative isolation, pulling in new changes on their schedule, and providing results back on their (manager's) timeline.

Sometimes, however, a developer working in their own branch can do something really silly, like commit a huge file without realizing it. Only after they push to the central repository does the giant size of the changeset become known. If one catches it quickly, one just removes the changeset and all is well.

If other developers have pulled that giant changeset, you're in a slightly harder spot. You can remove it from your repository and ask other developers to do the same, but you can't force them to do so. Unwanted changesets let loose in a development group have a way of getting pushed back into a shared repository again and again.

To ban the pushing of a specific changeset to a Mercurial repository one can use this terse hook in the repository's .hg/hgrc file:

    [hooks]
    pretxnchangegroup.ban1 = ! hg id -r d2cfe91d2837 > /dev/null 2>&1

Where d2cfe91d2837 is the node id of the forbidden changeset.

That's fine for a single changeset, but if you have more than a few to ban, this form avoids having a hook per changeset:

    [hooks]
    pretxnchangegroup.ban = ! hg log --template '{node|short}\n' \
        -r $HG_NODE:tip | grep -q -x -F -f /path/to/banned

where /path/to/banned is a file of disallowed changesets, one per line, like:

    acd69df118ab
    417f3c27983b
    cc4e13c92dfa
    6747d4a5c45d
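If the shell pipeline gets hard to maintain, the same check can be written as an external hook script. This is a sketch, not a drop-in: it runs the same `hg log` revset as the hook above and compares the result against the banned file, and the script name (say `ban_hook.py`) and the default banned-file path are placeholders:

```python
import os
import subprocess
import sys


def load_banned(path):
    """Read short changeset ids, one per line, ignoring blank lines."""
    with open(path) as f:
        return {line.strip() for line in f if line.strip()}


def incoming_nodes(first_node):
    """Short ids of the changesets being added (same revset as the shell hook above)."""
    result = subprocess.run(
        ["hg", "log", "--template", "{node|short}\n", "-r", first_node + ":tip"],
        check=True, capture_output=True, text=True,
    )
    return result.stdout.split()


def find_banned(nodes, banned):
    """Return the incoming node ids that appear in the banned set, in order."""
    return [node for node in nodes if node in banned]


def main(banned_path="/path/to/banned"):
    bad = find_banned(incoming_nodes(os.environ["HG_NODE"]), load_banned(banned_path))
    for node in bad:
        print("rejecting banned changeset %s" % node, file=sys.stderr)
    return 1 if bad else 0  # a non-zero exit makes Mercurial roll back the push


# Mercurial sets HG_NODE (the first new changeset) when it runs the hook.
if __name__ == "__main__" and "HG_NODE" in os.environ:
    sys.exit(main())
```

It would be wired up as `pretxnchangegroup.ban = python3 /path/to/ban_hook.py`; the payoff over the one-liner is a message naming the offending changeset.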

It's probably prohibitively hard to ban changesets in everyone's repositories, but at least you can set up a filter on shared repositories and publicly shame anyone who pushes them.

Switching Blogging Software

This blog started out called the unblog back when blog was a new-ish term and I thought it was silly. I'd been on mailing lists like fork and Kragen Sitaker's tol for years and couldn't see a difference between those and blogs. I set up some mailing list archive software to look like a blog and called it a day.

Years later that platform was aging, and wikis were still a new and exciting concept, so I built a blog around a wiki. The ease of online editing was nice, though readers never took to wiki-as-comments like I hoped. It worked well enough for a good many years, but I kept having a hard time finding my own posts in Google. Various SEO-blocking strategies Google employs that I hope never to have to understand were pushing my entries below total crap.

Now I've switched to blohg as a blogging platform. It's based on Mercurial, my version control system of choice, and has a great local-test and push-to-publish setup. It uses reStructuredText, which is what wiki text became, and reads great as source or renders to HTML. Thanks to Rafael Martins for the great software, templates, and help.

The hardest part of the whole setup was keeping every URL I've ever used internally for this blog still valid. URLs that "go dead" are a huge pet peeve of mine. Major, should-know-better sites do this all the time. The new web team brings up a brand new site, and every URL you'd bookmarked either goes to a 404 page or to the main page. URLs are supposed to be durable, and while it's sometimes a lot of work to keep that promise, it's worth it.

In migrating this site I took a couple of steps to make sure URLs stayed valid. I wrote a quick script to go through the site's HTTP access logs for the last few months, pull out every URL that got a non-404 response, and request each one from the staging site, making sure I had all the redirects in place so the old URLs yielded the same content there. I did the same essential procedure when I switched from mailing list to wiki, so I had to re-aim all those redirects too. Finally, I ran a web spider against the staging site to make sure it had no broken internal links. Which is all to say, if you're careful you can totally redo your site without breaking people's bookmarks and search results -- please let me know if you find a broken one.
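That log-replay script isn't included in the post. A minimal sketch of its two halves, assuming Apache's Common or Combined Log Format and a hypothetical staging base URL passed in by the caller:

```python
import re
import urllib.error
import urllib.request

# Matches the request and status in Common/Combined Log Format lines, e.g.
# 1.2.3.4 - - [01/Jun/2011:10:00:00 -0500] "GET /some/path HTTP/1.1" 200 512
REQUEST_RE = re.compile(r'"(?:GET|HEAD) (\S+) HTTP/[\d.]+" (\d{3})')


def live_paths(log_lines):
    """Return the sorted, de-duplicated request paths that got any non-404 status."""
    paths = set()
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if match and match.group(2) != "404":
            paths.add(match.group(1))
    return sorted(paths)


def check_staging(paths, base_url):
    """Fetch each path from the staging site; return those that don't end in a 200."""
    broken = []
    for path in paths:
        try:
            # urlopen follows redirects, so a correct 301 chain still ends in a 200.
            with urllib.request.urlopen(base_url + path, timeout=30) as response:
                if response.status != 200:
                    broken.append(path)
        except urllib.error.URLError:  # includes HTTPError for 4xx/5xx responses
            broken.append(path)
    return broken
```

Something like `check_staging(live_paths(open("access.log")), "http://staging.example.org")` would list the old URLs still missing a redirect. Comparing page content, as the post describes, would be a further step on top of the status check.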

Mercurial Remote Test Runner via Push

I heard someone in IRC saying that the Mercurial test suite was bogging down their laptop, so I set up a quick push-test service for the Mercurial crew. If you're in crew and you do a push to ssh://hgtester@ry4an.org:2222/ these steps will be taken:

- a local clone of the crew repo is updated from intevation.de

- a new, disposable local clone is created from that crew clone

- your csets are pushed to that new clone

- the working directory is updated to 'tip'

- a build is done

- the test suite is run

- the build and results show up in your stdout

- the new clone (and your pushed csets) are deleted

It's on a reasonably fast, unloaded box so the test suite runs in about 3 mins 30 seconds. Thanks to ThomasAH for providing the crew pubkeys. If you're not in crew and want to use the service please contact me and convince me you're not going to write a test that does a "rm -rf ~", because that would completely work.

Unfortunately, the output is getting buffered somewhere so there's no output after "searching for changes" for almost 4 minutes, but the final output looks as attached.

The machine's RSA host key fingerprint is: ac:81:ac:0b:47:f4:20:a1:4d:7e:6a:c5:62:ba:62:be. (updated 2010/06/07)

The scripts can be viewed here: http://bitbucket.org/Ry4an/hgtester/

If all that was gibberish, we now return you to your regularly scheduled silence.

Remote Repository Creation for Mercurial Over HTTP

I park in the #mercurial IRC channel a lot to answer the easy questions, and one that comes up often is, "How can I create a remote repository over HTTP?" The answer is: "You can't."

Mercurial allows you to create a repository remotely using ssh with a command line like this:

hg clone localrepo ssh://host//abs/path

but there's no way to do that over HTTP using either hg serve or hgweb behind Apache.

I kept telling people it would be a very easy CGI to write, so a few months back I put my time where my mouth was and did it:

    #!/bin/sh
    # printf instead of echo -n -e: /bin/sh is often dash, whose echo doesn't understand -e
    printf 'Content-Type: text/plain\n\n'
    mkdir -p /my/repos/$PATH_INFO
    cd /my/repos/$PATH_INFO
    hg init

That gets saved in unsafecreate.cgi and pointed to by Apache like this:

ScriptAlias /unsafecreate /path/to/unsafecreate.cgi

and you can remotely invoke it like this:

http://your-poorly-admined-host.com/unsafecreate/path/to/new/repo

That's littered with warnings about its lack of safety and bad administrative practices because you're pretty much begging for someone to do this:

http://your-poorly-admined-host.com/unsafecreate/something%3Brm%20-rf%20

Which is not going to be pretty, but on a LAN maybe it's a risk you can live with. Me? I just use ssh.

At the time I first suggested this, someone chimed in with a cleaned-up version in the original pastie, but it's no safer.
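If you do go the CGI route anyway, one way to shrink the blast radius (though not to add any authentication) is to whitelist the characters allowed in the path and call `hg init` with an argument list instead of through a shell. A minimal Python sketch; `REPO_ROOT` mirrors the shell version's `/my/repos`, and `repo_dir` is a hypothetical helper name:

```python
import os
import re
import subprocess
import sys

REPO_ROOT = "/my/repos"
# One or more slash-separated segments of letters, digits, "_" and "-" only.
SAFE_PATH = re.compile(r"[A-Za-z0-9_-]+(/[A-Za-z0-9_-]+)*")


def repo_dir(path_info):
    """Map PATH_INFO to a directory under REPO_ROOT, or None if it looks unsafe."""
    relative = path_info.strip("/")
    if not SAFE_PATH.fullmatch(relative):
        return None  # rejects "..", ";", spaces, "%" and empty paths
    return os.path.join(REPO_ROOT, relative)


def main():
    target = repo_dir(os.environ.get("PATH_INFO", ""))
    if target is None:
        sys.stdout.write("Status: 400 Bad Request\r\nContent-Type: text/plain\r\n\r\nbad path\n")
        return
    os.makedirs(target, exist_ok=True)
    # An argument list, not a shell string, so nothing in the path reaches a shell.
    subprocess.run(["hg", "init", target], check=True)
    sys.stdout.write("Content-Type: text/plain\r\n\r\ncreated %s\n" % target)


# Only act when run by a web server as a CGI (GATEWAY_INTERFACE is set by the server).
if __name__ == "__main__" and "GATEWAY_INTERFACE" in os.environ:
    main()
```

It still lets anyone who can reach the URL create repositories, so on anything but a trusted LAN, ssh remains the better answer.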

Grand Central Direct Dialer

I'm a huge fan of Grand Central's call screening features. It's irksome, however, that they make it hard to dial outward -- sending your GC number instead of your cell number as the caller id. To do so you need to first add the target number to your address book, and I'm often calling someone I don't intend to call again.

I started scripting up a way around that when I saw someone named Stewart already had.

I wanted to be able to easily dial outbound from my cellphone, so I created a mobile friendly web form around his script. The script requires info you should never give out (username, password, etc.), so you should really download the script and run it on your own webserver.

It also generates a bookmarklet you can drag to your browser's toolbar that will automatically dial any selected/highlighted phone number from your GC Number.

Comments

Only to save someone else the time: The iPhone app, Grand Dialer, does the same thing from an iPhone. Everyone says it's excellent.

Sasha Megan Bauer Brase

On July 29th, Kate gave birth to Sasha Megan Bauer Brase. Details and photos are on her site.

Comments

Is the Jerry Farber piece, an anthem of my youth, not copyrighted? Do you have permission? Where can I reach Farber? -- Some Random Person

Presumably you're referring to this, though I've no idea why you attached the comment to my daughter's birth announcement. I typed this from a blurry photocopy twenty years ago. If the copyright holder objects, I'll happily remove it.

Home Carbonator

Last year I read about home carbonation, and looking at the amount of club soda Kate and I buy, it made sense. The only unknown was where to put the ugly tank so it would be out of sight yet still convenient to use.

Months later, coworkers and I were at the Red Stag, which carbonates its own sparkling water, and talked about doing the same at the office. I still didn't act until a friend got a Soda Club machine as a gift.

This weekend I (or actually Kate since I was running late) went to Northern Brewer and picked up parts K003, KX03, and K026 to build the setup below. It really does work as easy as the first article promises, and the price including the purchase of a tank came to $200 total. So far the best thing we've carbonated was orange juice, but I'm looking to try some fruit purees soon.

Misc. Projects Including A Baby

To look at this long-neglected unblog one would think I've stopped doing things, but quite the contrary, there's been so very much doing of things that there's been no time for posting. In no particular order we have:

- Installed a home security system -- No particular need, but I've always enjoyed alarms and now our home has an RSS feed

- Installed an electric garage door opener -- No more brushing off the car in the morning after a snow. Granted it's still powered by an extension cord running from the basement, but hey so goes it.

- Installed nifty iButton electronic locks -- Now the same key opens every door to which I've got access including the Swarmcast offices.

- Un-finished the basement -- wool insulation and moisture: a winning combination. The project included a fun trip to the city trash transfer station.

- Remodeled the kitchen -- I did almost no actual labor on this excepting some tile installation with Kate, the adding of rolley shelves in the pantry, and having to eat out for four straight months.

Add to those minor projects some time spent on general upkeep of an 85 year old home, scouts and a decidedly non-zero number of hours spent at work, and it becomes clear that what Kate and I need is a baby.

Kate's due on July 28th, and we're very excited.

Comments

Mazel tov on the baby news!

I'm happy to see that people from my past who I have not kept up with in such a long time are living enjoyable and exciting lives!

-Mark Reck

You know how I have a strong distaste for breeding, and the products of breeding, but I suppose my stance has softened a little since several friends have produced, as far as I can tell, all together not terrible offspring. It's fun to prod at their ill proportioned chubby bodies for a while at least. So now I may offer my sincere congratulations on the upcoming baby. -Grrrk

Autobahn Accelerator for iTunes

My company, Swarmcast, announced one of our first public releases today. Previously we've been primarily selling to content providers, but now we're putting out a free, user-facing release. If you download our Autobahn Accelerator for iTunes you'll find your purchases from the iTunes music store come down three to ten times faster than they did before. We'll be adding support for lots of other sites (YouTube, etc.) in upcoming weeks.

Sadly we've got a MacOS version done, but the installation was deemed too clumsy for the polished Mac experience, so we'll have to wait a few weeks to get that out. Windows only for now (says this Linux user).

Customer Service Call Log

Between telecom troubles, warranty repairs, botched online orders, and marriage-related changes in insurance, mortgage, and bank accounts, I've spent a lot of time on the phone with customer service representatives lately. Few issues get resolved in a single call and even fewer without a transfer to another office.

I put together a sheet to keep track of who I spoke to, when, how to get back to them, and what they promised me. Now I grab one whenever I'm about to dial a 1-800 number to talk to the almost-friendly, nearly-helpful people on the other end. Besides the convenience of being able to say "On January 21st at 3pm Janice, CSR number JA5692, told me she'd ship the replacement FedEx overnight," representatives seem on their best behavior when you start out every interaction asking for their name and customer representative number.

View Any Simon Delivers Order

I forwarded a Simon Delivers order receipt email on to a friend, and he was able to view the order without being logged in as me. Turns out that if you have a Simon Delivers account at all they let you view any order. I created a quick web form to let anyone view any order using my account. Here's my favorite order so far:

| Qty | Item Name | Each |
| --- | --- | --- |
| 1 | Cetaphil Moisturizing Lotion | $10.99 |
| 2 | Coke Diet - 24/12 oz. Cans | $7.49 |
| 2 | Dr Pepper Diet - 24/12 oz. Cans | $7.49 |
| 2 | Hershey's Milk Chocolate Candy Bars - 6 ct. | $3.49 |
| 2 | Life Savers Wintergreen Flavored - Individually Wrapped - Bag | $1.89 |
| 1 | Nabisco Nutter Butter Peanut Butter Sandwich Cookies | $3.79 |
| 1 | Nestea Cool Lemon Iced Tea Fridge Pack - 12/12 oz. Cans | $4.19 |
| 2 | Pepsi - 24/12 oz. Cans | $7.49 |
| 2 | Pepsi 8/12 oz. Bottles | $3.69 |
| 1 | Seven-Up - 12/12 oz. Cans | $4.19 |
| 2 | Seven-Up Diet - 12/12 oz. Cans | $4.19 |

I'm sure fixing this problem is as simple as adding whatever the .asp equivalent of this is:

    if (currentUser != order.user) {
        return;
    }
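Fleshed out a little, that check might look like the Python sketch below. `ORDERS` is a hypothetical in-memory stand-in for the order database and `Forbidden` a hypothetical exception; the key details are comparing the order's owner to the logged-in user on every lookup, and answering the same way for "not yours" and "doesn't exist":

```python
class Forbidden(Exception):
    """The requested order isn't visible to this user (an HTTP 403/404 in a web app)."""


# Hypothetical stand-in for the order table: order number -> owner and items.
ORDERS = {
    1001: {"user": "alice", "items": ["milk", "bread"]},
}


def view_order(current_user, order_id):
    """Return the order only when it belongs to the logged-in user."""
    order = ORDERS.get(order_id)
    # Answer "doesn't exist" and "not yours" identically so order numbers can't be probed.
    if order is None or order["user"] != current_user:
        raise Forbidden(order_id)
    return order
```

Raising instead of silently returning nothing makes it harder for a caller to forget the check and render an empty page with a 200 status.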

Funny, though.

If you try your own and stumble across any funny ones, put the order number in the comments.

Comments

3885593 - Five boxes of cereal and two gallons of milk. -- Nick

2566520 - 15 gallons of bottled water, Milk Bones, and an issue of Minnesota Parent magazine. -- Dan

Alarm System

My favorite book in the Wren Hollow Elementary school library was The Gadget Book by Harvey Weiss. I must have checked it out a hundred times during the second and third grade and tried to build most of the half-practical projects it detailed. The best among them was the burglar alarm. It used wooden blocks, a door hinge, and a strip of metal to make a simple normally-open contact switch. It was the first electrical work I ever did and almost certainly shaped my interests and career path.

As a winter (read: indoor) project I decided to install a security system. Our system at the office uses DSC components and works well enough, so I used the same. I bought a Power 632 panel online along with some wired and wireless contact switches and a keypad. The only difficulty during installation was routing the wire for the keypad from upstairs to downstairs where it couldn't be seen. Programming was nothing like modern computer programming. Bits and bytes were entered directly into numbered memory registers by toggling boolean flags and entering hex characters on the keypad. It was oddly fun.

Everything's working quite well. We've got a bevy of contact, motion, and temperature sensors. We can arm/disarm from the keypad or using the wireless remote keys on our key chains. For monitoring I went with next alarm, and they even make an RSS feed available (though only through Yahoo, so I had to fake the User-Agent HTTP header).
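Faking the header is a one-liner in most HTTP libraries. A minimal Python sketch using the standard library's `urllib.request`; the feed URL and agent string below are hypothetical placeholders, since the post doesn't give either:

```python
import urllib.request

# Both values are hypothetical: the post doesn't give the feed URL or the agent string used.
FEED_URL = "https://example.com/alarm-status.rss"
USER_AGENT = "Mozilla/5.0 (compatible; ExampleFeedReader/1.0)"


def feed_request(url=FEED_URL, user_agent=USER_AGENT):
    """Build a request that sends a chosen User-Agent header instead of Python's default."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def fetch_feed(url=FEED_URL, user_agent=USER_AGENT):
    """Download the feed body as bytes."""
    with urllib.request.urlopen(feed_request(url, user_agent), timeout=30) as response:
        return response.read()
```

Without the explicit header, urllib identifies itself as `Python-urllib/3.x`, which is exactly what a server filtering on User-Agent would turn away.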

Trash Can Snorkel

This one's dumb. We've got the same trash can that everyone who shops at Target has. The inner removable pail is handy for keeping spills from pouring out the foot pedal hole, but its air-tight nature creates quite the vacuum when you're trying to pull the bag out.

After ripping the handles off yet another Glad bag trying to get it out of the pail I went to get a drill to poke an air hole in the bottom -- leak proof be damned. Next to the drill I saw a piece of 3/4" plastic tubing, which I ran from the top of the inner pail to the bottom.

|https://ry4an.org/pictures/web/cimg0280|

After a trash day that left the bag handles intact I can report that the hose allows air in without requiring new holes. Future trash pails should have top to bottom air ducts molded into them. Trashcan manufacturers please to be getting on that right now.

Whole House Humidifier

This weekend I put in a Honeywell 360A whole house humidifier. The instructions said it should take an hour, and it only took me four. Nothing went wrong, which is what you hope for when a project means cutting holes in your ductwork, tapping into your water, and some wiring. Now when we wake up our throats don't hurt.

Comments

Update: If you don't tighten down the compression fittings on the water supply line it will let go and you'll drain water into the floor drain all night. d'oh

From a concerned internet'er: Get rid of that saddle valve at your earliest convenience; those things are prone to leaking or letting go. Have someone put in a tap on the line with a proper cutoff with a 1/4" FIP. Same connector you would use for an icemaker. To be real safe, after you do that you can buy a braided steel icemaker line that will connect in between the cutoff and the humidifier, so no compression connectors anymore either.

Home Repair and Misc.

When I don't post here in a while it either means I'm not building anything new or that I'm too busy to write about what I am doing. This time it's the latter. Not that any of it's been exciting, but almost all of it involved using a saw, which totally counts.

Gwin, our eldest cat, has always kicked toys into the basement sump for the joy of watching humans pick them out later. Milo, on the other hand, likes running into the muddy sump and then running upstairs. To keep the cats and their toys out I built a little wooden frame to fit and covered it with chicken wire. It's ugly but functional.

|https://ry4an.org/pictures/web/Sump|

At some point during Monday night's storm a 20' branch fell from the sky and broke our fence gate. Neither of us woke up. Sometimes I park my car right where it landed, and I'm glad Monday wasn't one of those times. Repair was just a matter of replacing a few pickets and fixing the latch. The latch has never worked well and still doesn't, but it's slightly better, which I keep telling Kate counts as fixing it.

|https://ry4an.org/pictures/web/Gate|

Meager construction efforts aside, I've been working on some big things at work and on our [wedding invitations](http://kateandry4an.org/gallery/invitation), which we hope to mail in the next week or two.

Meager Home Improvements

After moving into the house I started a series of small home improvement tasks. Some of them have genuine safety reasons but many happened only because changing things demonstrates residence. Here's an incomplete list of things I've done:

- added a ceiling fan to the bedroom

- rewired the doorbell with modern wire so it doesn't ring every time you walk past the dining room heat register

- added shelving, a phone jack and power outlets to create a server corner

- added appliance-grade outlets behind the stove and fridge (rather than the ungrounded lamp-grade extension cords running through holes in the floor they previously had)

- added a motion light to the break-in-ariffic back yard

- cleaned out the gutters (I knew there was a reason I got that condo)

- replaced the rotting wiring for the basement lighting

|https://ry4an.org/pictures/web/datacenter| |https://ry4an.org/pictures/web/motionlight|

Display Google Calendars with PHP iCalendar

Google has a new calendar service, and it's great. I really try to avoid hosted data solutions, but this one's just too good to pass up. My one gripe is that there's no easy way for non google calendar users to view the calendars. They're available live as both ical and rss/xml files, but the average home web user doesn't know what to do with either of those.

There are plenty of services out there that will display an ical file as a web page, but none of the ones I tested rendered the google ical output well, and all of them were packed with ads. Previously, I'd used software called phpicalendar to display ical files created by my old calendaring solution on the web, so I started there. It didn't parse the google output well either. However, with a little tweaking (see the patch in the zip file below) and some Apache trickery (see the README in the zip file) I can now get good phpicalendar output from google.

google-calendar-phpicalendar-2.22.zip

Update: Looks like now google offers a good way to do this.

Comments

Hey man I really want to get my php iCalendar working with my new Google Calendar as you have, but my server is not a Linux box, so I don't have a good way to patch the diff file you included in the zip. I was wondering if you would be willing to upload the actual files that you changed, or would you be willing to email them to me. I would really appreciate it.

Hrm, not to be unhelpful, but if you read the patch file you'll see I just commented out one block and added a simple if test somewhere else. It should be very easy to do by hand on the two files. The unified diff format is nice in that it's quite human readable despite being ready for machine processing. -- Ry4an

I'm not familiar with php icalendar, but (stupid question...) if using your work around, and I keep making new events in the google calendar, will they show up in the icalendar, or will some kind of cron job be required? (maybe I should just use the icalendar... but the google site is so seductive....) --Rebecca, cookieshouse.com

Yes, my phpicalendar hack does a live display of the google data. One could use phpicalendar all by itself, but I like the invites, access controls, and UI from google calendar well enough that I thought it was worth trying to have phpicalendar do a live display of data I keep in google calendar. -- Ry4an

I was all excited to work on this little project. Then I realized I don't have the ability to apply patches (or if I do, I haven't a clue how to). Thanks for sharing though, it looks super cool on your site! --Rebecca, cookieshouse.com

I could not get your method to work, so I had to rework ical_parser.php. I recreated the $cal_filelist array with my google calendar URLs. Then, so the names of the calendars were not "basic", I created another array called $cal_names. Maybe some source code will make this clearer. At about line 102 of ical_parser.php:

```php
$cal_filelist = array("http://calendar url 1", "http://calendar url 2");
$cal_names = array("Calendar Name 1", "Calendar Name 2");
$counter = 0;
foreach ($cal_filelist as $cal_key => $filename) {
    // Find the real name of the calendar.
    //$actual_calname = getCalendarName($filename); original code commented out
    $actual_calname = $cal_names[$counter];
    $counter++;
}
```

-- Psycho Whale

That's a more general solution than my quick hack. You might want to submit your code changes back to the phpicalendar project using their patch tracker. I'm sure they're getting all sorts of "support google calendar" requests and yours is a good step toward that. -- Ry4an

I applied your patch... no problem. But when I did the .htaccess edit, it would not redirect the .ics file to the google calendar. I then edited config.inc.php to allow webcals and added the exact path of the .ics (which would be redirected) in the "$list_webcals[] = *;" area. No dice. It would then give me an error (which is strange since the file actually existed in that spot). Any idea what I'm doing wrong? Do I need to edit the "$default_path" in config.inc.php to point to the redirected .ics file too? I'd love to get this thing to work, but it doesn't seem to be happening. I tried Psycho Whale's solution also, but that worked even less. Not sure if he was editing ical_parser.php before or after your patch, or if that's even relevant. Lots of questions. Any help? -- RSmith423

If the .htaccess file is ignoring your Redirect line, it's because your httpd.conf file isn't set to allow Redirect lines in .htaccess files (for Redirect, the AllowOverride setting covering that directory has to include FileInfo). You can either edit httpd.conf to allow Redirect lines in .htaccess files or you can just put the Redirect line directly into the httpd.conf file. Instructions for both can be found in the Apache online help. -- Ry4an

I noticed that recurring events don't display correctly. If you have a recurring event, the start time and end time are always the same.

Here is my hack to PHP iCalendar to make it work:

in ical_parser.php:

my code:

```php
ereg('^PT([0-9]+)S', $data, $duration);
$the_duration = $duration[1];
```

replaces this original code:

```php
ereg ('^P([0-9]{1,2}[W])?([0-9]{1,2}[D])?([T]{0,1})?([0-9]{1,2}[H])?([0-9]{1,2}[M])?([0-9]{1,2}[S])?', $data, $duration);
$weeks = str_replace('W', '', $duration[1]);
$days = str_replace('D', '', $duration[2]);
$hours = str_replace('H', '', $duration[4]);
$minutes = str_replace('M', '', $duration[5]);
$seconds = str_replace('S', '', $duration[6]);
$the_duration = ($weeks * 60 * 60 * 24 * 7) + ($days * 60 * 60 * 24) + ($hours * 60 * 60) + ($minutes * 60) + ($seconds);
```

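Apparently Google specifies event durations purely in seconds (e.g. PT3600S), but PHP iCalendar expects separate hour, minute and second fields. The original regex also allows at most two digits per field, so a value like PT3600S leaves every field empty and the duration comes out as zero. That is why the start and end times matched.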

Thanks for the patch!

-Charles
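For comparison, here's a rough Python sketch of a parser that accepts both Google's seconds-only durations and the week/day/hour/minute form the original phpicalendar regex was aiming for. It assumes DURATION values follow RFC 5545. It's an illustration rather than a drop-in phpicalendar patch, and `duration_seconds` is a hypothetical helper name.

```python
import re

# iCalendar (RFC 5545) DURATION, e.g. "PT3600S", "P1W", "P1DT2H30M", "-PT15M".
# Each field accepts any number of digits. This is slightly more permissive
# than the RFC (it would also accept a week count mixed with days).
DURATION_RE = re.compile(
    r"^(?P<sign>[+-])?P"
    r"(?:(?P<weeks>\d+)W)?"
    r"(?:(?P<days>\d+)D)?"
    r"(?:T(?:(?P<hours>\d+)H)?(?:(?P<minutes>\d+)M)?(?:(?P<seconds>\d+)S)?)?$"
)

# Seconds represented by one unit of each field.
FIELD_SECONDS = {
    "weeks": 7 * 24 * 60 * 60,
    "days": 24 * 60 * 60,
    "hours": 60 * 60,
    "minutes": 60,
    "seconds": 1,
}


def duration_seconds(value: str) -> int:
    """Convert an iCalendar DURATION string to a signed number of seconds."""
    text = value.strip()
    match = DURATION_RE.match(text)
    # Reject non-matches and empty durations such as "P" or "PT".
    if match is None or not any(match.group(f) for f in FIELD_SECONDS):
        raise ValueError(f"not an iCalendar duration: {value!r}")
    total = sum(int(match.group(f) or 0) * secs for f, secs in FIELD_SECONDS.items())
    return -total if match.group("sign") == "-" else total


print(duration_seconds("PT3600S"))    # Google's seconds-only form, prints 3600
print(duration_seconds("P1DT2H30M"))  # day/hour/minute form, prints 95400
```

Summing per-field multipliers keeps the seconds-only case and the mixed case on the same code path.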

The only thing I had to do to get GoogleCalendar to work was the following:

```
phpicalendar/config.inc.php: $allow_webcals = 'yes';
phpicalendar/config.inc.php: $timezone = 'Europe/Paris';
php.ini: allow_url_fopen = On
```

And it worked right out of the box:

http://YOUR-SITE/phpicalendar/month.php?cal=http://www.google.com/calendar/ical/YOUR-GMAIL/public/basic&getdate=20060518

Thomas.
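Thomas's link nests one URL inside another's query string. That works for the Google URL as written, but a calendar URL that itself contains & or ? would need percent-encoding. Here's a small Python sketch of building such a link. It assumes phpicalendar reads the `cal` and `getdate` parameters from Thomas's example, with `getdate` as YYYYMMDD. PHP decodes query parameters before the script sees them. `month_view_url`, `example.org`, and the Gmail address are hypothetical placeholders.

```python
from datetime import date
from urllib.parse import urlencode


def month_view_url(site: str, calendar_url: str, day: date) -> str:
    """Build a phpicalendar month.php link for a remote calendar (hypothetical helper).

    urlencode() percent-encodes the nested calendar URL, so characters such
    as & or ? inside it can't be mistaken for the outer query's syntax.
    """
    query = urlencode({"cal": calendar_url, "getdate": day.strftime("%Y%m%d")})
    return f"http://{site}/phpicalendar/month.php?{query}"


# "example.org" and the Gmail address are placeholders.
print(month_view_url(
    "example.org",
    "http://www.google.com/calendar/ical/someone@gmail.com/public/basic",
    date(2006, 5, 18),
))
```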

Excellent, maybe they've updated. I kept having it refuse to display any webcal URL that didn't end in '.ics'; perhaps that's been fixed. Also, I found I needed to add some link text to the blank free/busy view entries for them to be clickable, but that would only be required if you use the free/busy (rather than full detail) view gcalendar provides. -- Ry4an

Fixing the Roomba Circle Dance

My Roomba had been on the fritz lately. When I powered it on it went forward a few inches and then started backing up in a tight circle. I figured it was a dirty sensor, but I cleaned everything I could see and had no luck.

My coworker Brandon pointed me to the Circle Dance website, which explains how a dirty internal sensor can cause just that problem. I've got an older Roomba, but the wheel assembly seemed the same. The site has great instructions and photos showing how one can fix the problem. They do, however, go through incredible contortions, including removing 10 screws and a hard-to-replace panel, just to remove a single screw.

I found I could skip all that by drilling a small hole in the fender rather than removing it. Since replacing the fender is so difficult that the original site recommends not bothering, I think a small hole is an acceptable cosmetic defect.

This image shows just where the hole was made:

...and now the Roomba works great again.

Improving Nick Tracking using String Similarity

Years back I wrote an IRC nick tracking script. It's served me well since then, but it has one major annoyance. When people changed their name slightly it would remember that name change, even though the old/new mapping didn't contain any real identity change information.

For example, when Gabe_ became Gabe it would display every message from him as <Gabe_(Gabe)>. That doesn't tell me anything interesting about who Gabe is.

I decided to tweak the tracker to ignore small changes in names. Computers don't think in terms like "small"; they need a way to quantify the difference and then check whether it exceeds a specified threshold. Fortunately, lots of people have worked on just that problem -- mostly so that spell checkers can present you with a list of real words close to the non-word you typed.
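One classic way to put a number on "small" is edit distance. Here's a minimal Python sketch of the Levenshtein distance mentioned below, written from scratch rather than through String::Approx. It adds a length-normalized score so a single threshold could apply to both short and long nicks. That normalization is an assumption for illustration, not something the tracker script does.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance: the fewest single-character insertions, deletions,
    or substitutions that turn a into b (standard dynamic programming)."""
    previous = list(range(len(b) + 1))  # row for the empty prefix of a
    for i, ca in enumerate(a, start=1):
        current = [i]  # turning a[:i] into "" takes i deletions
        for j, cb in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,               # delete ca
                current[j - 1] + 1,            # insert cb
                previous[j - 1] + (ca != cb),  # substitute, free when equal
            ))
        previous = current
    return previous[-1]


def similarity(a: str, b: str) -> float:
    """Scale the distance to 0..1 so one threshold works for any nick length."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1 - levenshtein(a, b) / longest


print(levenshtein("Gabe_", "Gabe"))  # prints 1
print(similarity("Gabe_", "Gabe"))
```

Keeping only the previous row means memory grows with one string's length rather than with the product of both.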

When I've worked with close-enough strings in the past I've used the Levenshtein distance as implemented in the String::Approx module or the ancient Soundex algorithm. This time, however, I tried out the String::Trigram module written by Tarek Ahmed, which implements the method proposed by Angell in this paper. Here's an explanation from String::Trigram's README file:

This consists of splitting some string into triples of characters and comparing those to the trigrams of some other string. For example the string kangaroo has the trigrams "{kan ang nga gar aro roo}". A wrongly typed kanagaroo has the trigrams "{kan ana nag aga gar aro roo}". To compute the similarity we divide the number of matching trigrams (tokens not types) by the number of all trigrams (types not tokens). For our example this means dividing 4 / 9 resulting in 0.44.

Thus far, at a 50% match threshold, it's never failed to detect a real change or to ignore a minor change, and if it does I should just be able to notch the threshold higher or lower. Great stuff.
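Here's a minimal Python sketch of the trigram similarity as the README excerpt describes it, with a 0.5 cutoff mirroring the 50% threshold. It is not String::Trigram's API and ignores the module's options, such as the warp => 1.8 the script passes to compare. Counting a repeated trigram by its smaller count in the two strings is one reading of "tokens not types". `is_minor_change` is a hypothetical helper.

```python
from collections import Counter


def trigrams(s: str) -> list[str]:
    """Overlapping three-character substrings, e.g. "kangaroo" -> kan, ang, ..."""
    return [s[i:i + 3] for i in range(len(s) - 2)]


def trigram_similarity(a: str, b: str) -> float:
    """Matching trigram tokens divided by the distinct trigrams of both strings."""
    ta, tb = Counter(trigrams(a)), Counter(trigrams(b))
    matching = sum((ta & tb).values())     # shared tokens, min count of each
    distinct = len(ta.keys() | tb.keys())  # union of trigram types
    return matching / distinct if distinct else 0.0


def is_minor_change(old: str, new: str, threshold: float = 0.5) -> bool:
    """Hypothetical helper: treat a rename as cosmetic at or above the threshold."""
    return trigram_similarity(old, new) >= threshold


print(round(trigram_similarity("kangaroo", "kanagaroo"), 2))  # prints 0.44
print(is_minor_change("Gabe_", "Gabe"))    # prints True (2 of 3 trigrams shared)
print(is_minor_change("Asrael", "Sammi"))  # prints False (no trigrams shared)
```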

The modified script can be viewed here and downloaded here.

Comments

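If you wanted to only track nick changes in certain channels you'd add code like this at line 86:

```perl
# $chan is the channel record passed with the "nicklist changed" signal, so compare its name
return unless grep { $_ eq $chan->{'name'} } qw(#channelone #channeltwo #channel3);
```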

I've modified 1.1 with a new command, /trackchan, that lets one manage a list of channels where nick tracking should take place. If the list is empty, tracking will be done in all channels. The following is a unified diff.

What it doesn't do:

- Check to make sure that the channel you're passing in actually conforms to any standard channel naming conventions.

- Check to see if the channel already exists in the list before trying to remove it (though since it's just a simple grep, no error is returned in either case).

- Check to see if you're adding a duplicate channel to the list (feel free, it doesn't affect the functionality one bit).

- Have an option for printing the channel list. I think I will modify it to just print the channel list in addition to the usage if /trackchan is called with no arguments.

-- Gabe

```diff
--- nick-track.pl.orig Thu Dec 22 10:37:34 2005
+++ nick-track.pl.trackchan Thu Dec 22 14:50:30 2005
@@ -22,7 +22,7 @@
 use Irssi;
 use strict;
 use String::Trigram;
-use vars qw($VERSION %IRSSI %MAP);
+use vars qw($VERSION %IRSSI %MAP @CHANNELS);
 $VERSION = "1.1";
 %IRSSI = (
@@ -47,6 +47,7 @@
     'Asrael' => 'Sammi',
     'Cordelia' => 'Sammi',
 );
+@CHANNELS = qw();
 sub call_cmd {
     my ($data, $server, $witem) = @_;
@@ -84,6 +85,13 @@
     my ($chan, $nick_rec, $old_nick) = @_;
     my $nick = $nick_rec->{'nick'};
+    # If channel list is empty, track for all channels.
+    # If channel list is non-empty, track only for channels in list.
+    my $channels = @CHANNELS;
+    if ($channels > 0) {
+        return unless grep { $_ eq $chan->{'name'} } @CHANNELS;
+    }
+
     if (defined $MAP{$old_nick}) { # if a previous mappings exists
         if (String::Trigram::compare($nick, $MAP{$old_nick},
                 warp => 1.8,
@@ -101,6 +109,34 @@
         }
     }
 }
+
+sub trackchan_cmd {
+    my ($data, $server, $witem) = @_;
+    my ($cmd, $channel) = split ' ', $data;
+    my @cmds = qw(add del);
+
+    unless (defined $cmd && defined $channel && grep { $_ eq $cmd } @cmds) {
+        print "Usage: /trackchan [add|del] #channel";
+        return;
+    }
+
+    if ($cmd eq 'add') {
+        push @CHANNELS, $channel;
+        print "$channel added to channel list";
+    }
+
+    if ($cmd eq 'del') {
+        @CHANNELS = grep { $_ ne $channel } @CHANNELS;
+        print "$channel removed from channel list";
+    }
+
+    print "Current channel list:";
+    foreach my $channel (@CHANNELS) {
+        print " $channel";
+    }
+}
+
+Irssi::command_bind trackchan => \&trackchan_cmd;
 Irssi::signal_add("message public", \&rewrite);
 Irssi::signal_add("nicklist changed", \&nick_change);
```

Thanks, Dopp, great stuff! -- Ry4an

Linux on the Dell X1

Yesterday I got the warranty replacement machine for my (company's) Dell X300 laptop. Dell mailed me an X1, which seems a nice enough machine. It meets my firm criteria: under 3 lbs and thinner than an inch. If Apple would hit those numbers I'd be there in a second.

Unfortunately, it looks like getting Linux onto this thing is going to be a pain. Emperor Linux will sell an X1 with Linux pre-installed, but they want $450 to take the X1 I already "own" and put Linux onto it. If they're not able to simply mirror a debugged installation over, that says a lot about their volume. I value my time pretty highly, but $450 for a software install seems extreme.

Fortunately there are plenty of pages detailing how to get Linux running on the X1. I'll muddle through the process and attach my notes as comments.

Comments